Published 9/22/2025

At its heart, an audio to text AI is a tool that takes spoken words from an audio file and turns them into written text. Think of it as a super-fast, highly accurate digital stenographer that can listen to a meeting, interview, or lecture and type out every single word. It’s all about turning messy, hard-to-use audio files into clean, searchable text you can actually do something with.

We’ve all been there—trying to find that one key comment someone made thirty minutes into an hour-long meeting recording. You end up scrubbing back and forth, listening and re-listening, wasting valuable time. It’s a frustrating manual process that absolutely kills productivity. This is precisely the problem that audio to text AI was built to solve.

Essentially, this technology is a tireless digital transcriber. It doesn't just hear words; it understands context and neatly converts spoken language into a structured text document. This guide is here to pull back the curtain on how it all works, not as some abstract science, but as a real-world tool for businesses, creators, and anyone who deals with audio.

We’ll start by breaking down the core concepts that make these systems tick. But don't worry, we'll skip the dense technical jargon and use clear analogies to show you how these complex ideas are turning spoken words into organized, usable data.

The move toward automated transcription is more than just a passing trend; it's a massive shift in how we handle information. The global speech-to-text API market was valued at around USD 4.42 billion in 2025 and is expected to nearly double, hitting USD 8.57 billion by 2030. That’s a compound annual growth rate of 14.1%, fueled by a growing need for smarter data processing across every industry. You can dive deeper into these numbers with the full Grand View Research industry analysis.
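As a quick sanity check on figures like these, the compound annual growth rate can be recomputed from the two endpoint values. The sketch below assumes a five-year span (2025 to 2030) and uses an illustrative function name; the result comes out at roughly 14%, in line with the cited rate.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market figures quoted above, in billions of USD.
rate = cagr(4.42, 8.57, years=5)
print(f"Implied growth rate: {rate:.1%}")
```

Small differences from a published rate usually come down to which base year the analyst used.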

So, why is this happening so fast? A few key reasons stand out:

The real magic of audio to text AI isn't just turning speech into words. It’s about transforming fleeting conversations into permanent, searchable, and analyzable assets for any organization.

With that foundation, let's take a look at the benefits this technology brings to the table for both individuals and companies.

This table breaks down the core advantages of using AI for transcription, giving you a quick snapshot of its value.

| Benefit | Impact on Individuals | Impact on Businesses |
|---|---|---|
| Time Savings | Frees up hours spent on manual transcription for more creative or strategic work. | Boosts team productivity by automating a tedious, time-consuming task. |
| Cost Reduction | Avoids the high costs of hiring professional transcription services. | Reduces operational expenses and allocates budget to growth-focused areas. |
| Improved Accessibility | Makes content like podcasts and lectures available to a wider audience. | Ensures compliance with accessibility standards and broadens market reach. |
| Enhanced Searchability | Instantly find key moments in long audio or video files using keywords. | Unlocks insights from customer calls, meetings, and video archives. |
| Data-Driven Insights | Helps analyze personal interviews or research notes to identify key themes. | Enables sentiment analysis and trend identification from spoken customer feedback. |

This is just the beginning. This guide will take you deeper into the powerful features and real-world applications of this technology, showing you exactly how to pick the right tools and bring them into your own workflow.

Ever wonder how a machine can keep up with fast talkers, decipher thick accents, and even get the slang right? It's not magic; the process is surprisingly similar to teaching a child to speak. You start with basic sounds, string them together into words, and then use context to figure out what someone really means.

An audio to text AI doesn't "hear" in the human sense. Instead, it takes the raw data of a sound wave and runs it through a sophisticated, multi-step pipeline to turn it into text. Let’s pull back the curtain and see how the core components work together.

First up is Acoustic Modeling. Think of this as the AI’s ear—a highly trained sound detective. Its one and only job is to listen to the audio and chop it up into the smallest units of sound, called phonemes. In the word "cat," for example, the phonemes are the distinct sounds "k," "æ," and "t."

The acoustic model gets its smarts from training on vast libraries of recorded speech where every sound wave is meticulously mapped to its phoneme. This massive exposure is what allows the AI to recognize those fundamental building blocks no matter who is talking—whether they have a deep voice, a high-pitched one, or a strong regional accent. This first step, turning messy sound waves into clean phonetic data, is the bedrock of the whole operation.
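To make the "chopping up" step concrete, here is a minimal sketch of how a waveform might be sliced into short overlapping frames before any sound classification happens. The 16 kHz sample rate, 25 ms window, and 10 ms hop are common conventions rather than anything this article specifies, and the synthetic tone stands in for real speech. A production acoustic model would feed much richer features from each frame into a trained neural network.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed; a common rate for speech)


def make_tone(freq_hz: float, seconds: float) -> list[float]:
    """Generate a sine wave as a stand-in for recorded speech."""
    n = int(SAMPLE_RATE * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


def split_into_frames(samples, frame_ms=25, hop_ms=10):
    """Slice a waveform into overlapping fixed-length frames."""
    frame_len = SAMPLE_RATE * frame_ms // 1000
    hop_len = SAMPLE_RATE * hop_ms // 1000
    last_start = len(samples) - frame_len
    return [samples[s:s + frame_len] for s in range(0, last_start + 1, hop_len)]


def frame_energy(frame):
    """Mean squared amplitude: a crude per-frame loudness feature."""
    return sum(x * x for x in frame) / len(frame)


audio = make_tone(440.0, seconds=1.0)
frames = split_into_frames(audio)
energies = [frame_energy(f) for f in frames]
print(f"{len(frames)} frames of {len(frames[0])} samples each")
```

Each frame is short enough that the sound inside it is roughly stable, which is what lets a model assign it to a phoneme.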

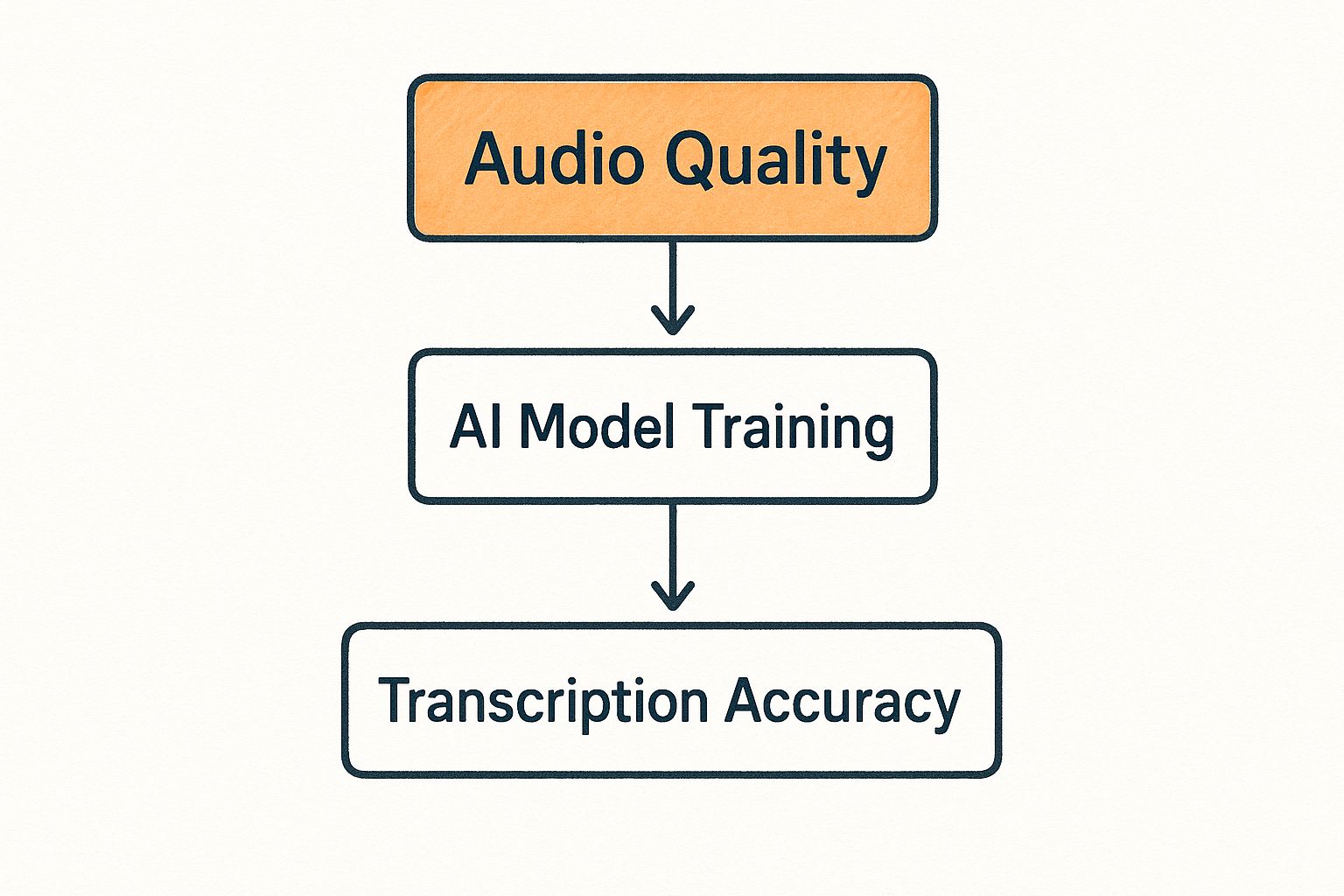

The diagram above shows how each stage builds on the last, all starting with the quality of the audio itself.

As you can see, everything hinges on that initial audio quality. Clear sound is the foundation for effective AI training, which ultimately dictates how accurate the final transcript will be.

Once the acoustic model has a string of phonemes, it hands them off to the Language Model. This component is like a supercharged predictive text engine. Its goal is to figure out the most likely sequence of words that those sounds could represent.

Let's say the acoustic model picks up the phonemes for "recognize speech." The language model knows that "recognize speech" and "wreck a nice beach" sound almost identical. How does it choose? By looking at the surrounding words and context. It calculates which phrase is statistically more probable in that specific sentence.

This predictive power is what helps the AI solve the constant ambiguity of human language. It ensures the output isn't just a jumble of phonetically correct words but a sentence that actually makes sense.

This is why today’s transcription tools feel so intuitive. They don't just hear; they anticipate.
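The choice between two sound-alike phrases can be illustrated with a toy bigram model. Everything in this sketch is an assumption made for illustration: the counts are invented, the smoothing is the simplest kind available, and modern systems use neural language models rather than lookup tables. The underlying idea of scoring each candidate by how probable its word sequence is carries over.

```python
import math

# Hypothetical bigram counts standing in for statistics learned from a large
# text corpus. Real language models learn from billions of words.
BIGRAM_COUNTS = {
    ("to", "recognize"): 50,
    ("recognize", "speech"): 40,
    ("to", "wreck"): 5,
    ("wreck", "a"): 4,
    ("a", "nice"): 60,
    ("nice", "beach"): 8,
}
UNIGRAM_COUNTS = {
    "to": 1000, "recognize": 60, "wreck": 10, "a": 2000,
    "nice": 100, "speech": 80, "beach": 30,
}
VOCAB_SIZE = len(UNIGRAM_COUNTS)


def log_prob(words: list[str]) -> float:
    """Log-probability of a word sequence under an add-one-smoothed bigram model."""
    total = 0.0
    for prev, word in zip(words, words[1:]):
        count = BIGRAM_COUNTS.get((prev, word), 0)
        total += math.log((count + 1) / (UNIGRAM_COUNTS.get(prev, 0) + VOCAB_SIZE))
    return total


candidates = [
    "how to recognize speech".split(),
    "how to wreck a nice beach".split(),
]
best = max(candidates, key=log_prob)
print(" ".join(best))
```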

The final layer of the puzzle is Natural Language Processing (NLP). After the language model puts together a likely sentence, NLP swoops in to add the finishing touches. This is where the AI moves beyond simply writing down words and starts to grasp their actual meaning.

NLP handles all the subtle rules of communication that we take for granted. Its main tasks include:

Working in concert, these three stages—acoustic modeling, language modeling, and NLP—create a powerful system that transforms spoken audio into structured, meaningful text. Each layer refines the work of the one before it, turning the complexities of human speech into data we can finally use.

It’s one thing to understand the tech behind an audio to text AI, but it's another thing entirely to know what to look for when you're actually picking one. The truth is, not all transcription services are built the same. The gap between a basic dictation app and a genuinely powerful platform is all in the features—the tools designed to tackle the messiness of real-world audio.

These features go way beyond just turning words into text. They add layers of context, clarity, and genuine usefulness to your transcripts. Let's dig into the essential and next-level capabilities you should expect from any serious transcription tool.

Before we get into the fancy stuff, let’s talk about the non-negotiables. These are the core features that make a transcription service reliable and usable. Think of them as the foundation of a house—without a solid one, everything else is shaky.

Here are the absolute must-haves:

These core features ensure your transcripts are accurate, practical, and easy to work with. For a deeper look at how different tools stack up, a good comparative review like this one on the 12 Best Speech to Text for Windows Apps can be really helpful.

Once you’ve got the basics covered, the advanced features are what separate the good from the great. These are the capabilities that solve the specific, often tricky, challenges that basic tools just can’t handle. They add a layer of intelligence that turns a simple transcript into a rich, structured source of information.

The real magic of modern transcription AI is its ability to understand not just what was said, but also who said it and what it means within a specific context.

This is where you'll find the biggest time savings and the most valuable insights.

One of the most powerful advanced features is speaker diarization. This is just a fancy term for technology that figures out who is speaking and when. Instead of getting a giant, confusing block of text, the AI labels each part of the conversation (like "Speaker 1," "Speaker 2"), creating a clean, easy-to-read script.

Imagine trying to transcribe a meeting with five different people. Without diarization, you’d have a wall of text that's almost impossible to follow. With it, you get a perfectly organized dialogue showing who said what. It's an absolute game-changer for meeting minutes, interviews, or analyzing customer support calls.
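Once a service has labeled each stretch of speech with a speaker, turning that into a readable script is mostly bookkeeping. The sketch below assumes a hypothetical `Segment` structure with a speaker label, a start time, and the text; actual response formats vary by provider.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    speaker: str   # label assigned by the diarization step
    start: float   # seconds from the beginning of the recording
    text: str


def format_script(segments: list[Segment]) -> str:
    """Merge consecutive segments from the same speaker into labeled turns."""
    turns: list[tuple[str, float, list[str]]] = []
    for seg in segments:
        if turns and turns[-1][0] == seg.speaker:
            turns[-1][2].append(seg.text)
        else:
            turns.append((seg.speaker, seg.start, [seg.text]))
    lines = []
    for speaker, start, texts in turns:
        minutes, seconds = divmod(int(start), 60)
        lines.append(f"[{minutes:02d}:{seconds:02d}] {speaker}: {' '.join(texts)}")
    return "\n".join(lines)


segments = [
    Segment("Speaker 1", 0.0, "Let's review the launch plan."),
    Segment("Speaker 1", 3.2, "Any blockers?"),
    Segment("Speaker 2", 5.8, "Just the pricing page."),
    Segment("Speaker 1", 65.0, "Okay, let's fix that first."),
]
script = format_script(segments)
print(script)
```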

Another critical feature is the ability to add a custom vocabulary. Standard AI models are trained on general language, so they often trip over industry-specific jargon, company acronyms, or unique product names. A custom vocabulary feature lets you "teach" the AI a list of words relevant to your field, whether it’s medical terms, legal phrases, or technical specs.

By building out your own personalized dictionary, you can dramatically boost the accuracy for your niche content. This is how you ensure the AI transcribes "pharmacokinetics" correctly instead of guessing "farmer kinetics"—saving you from embarrassing and tedious manual corrections.
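Transcription services typically apply a custom vocabulary inside the recognition step itself, which is not something you can reproduce from the outside. As a rough stand-in, the sketch below applies a vocabulary after the fact using fuzzy string matching from Python's standard `difflib` module. This is a different technique from decoder-level biasing, and the vocabulary list, function names, and 0.7 cutoff are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher

CUSTOM_VOCABULARY = ["pharmacokinetics", "bioavailability"]  # example terms


def similarity(a: str, b: str) -> float:
    """Similarity score between 0 and 1 based on matching character blocks."""
    return SequenceMatcher(None, a, b).ratio()


def apply_vocabulary(text: str, vocabulary: list[str], cutoff: float = 0.7) -> str:
    """Replace one- or two-word spans that closely resemble a vocabulary term."""
    words = text.split()
    result = []
    i = 0
    while i < len(words):
        replaced = False
        for span in (2, 1):  # try the longer span first so split words are rejoined
            chunk = words[i:i + span]
            if len(chunk) < span:
                continue
            candidate = "".join(chunk).lower()
            best = max(vocabulary, key=lambda term: similarity(candidate, term))
            if similarity(candidate, best) >= cutoff:
                result.append(best)
                i += span
                replaced = True
                break
        if not replaced:
            result.append(words[i])
            i += 1
    return " ".join(result)


fixed = apply_vocabulary("the study measured farmer kinetics in adults", CUSTOM_VOCABULARY)
print(fixed)
```

A cutoff that is too low will start rewriting ordinary words, so any approach like this needs testing against real transcripts before it is trusted.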

It's one thing to talk about the technology behind AI transcription, but where the rubber really meets the road is in its real-world applications. This is where the theory gets interesting—when it moves out of the lab and starts solving actual problems for people every day.

From bustling newsrooms to quiet hospital wards, automated transcription isn't some far-off concept anymore. It's a practical, hands-on tool that’s already changing how work gets done.

The numbers tell the same story. The AI transcription market was valued at around USD 4.5 billion in 2024, but it’s expected to explode to nearly USD 19.2 billion by 2034. This isn't just hype; it's a reflection of a real demand for smarter, faster ways to handle information. North America is currently leading the charge, making up over 35.2% of the global market in 2024. You can dig into the specifics in this complete AI transcription market report.

In the media world, speed and accuracy are everything. AI transcription has become a game-changer.

Imagine a journalist on a tight deadline. Instead of spending hours re-listening to an interview, they can get an instant transcript, pull out the perfect quote, and get their story filed. It’s a massive time-saver.

It's just as vital in entertainment. Production teams use AI to create subtitles and closed captions, opening up their films and shows to a global audience and those who are hard of hearing. A task that once took days of meticulous manual work now happens in a fraction of the time.

A few key uses in media stand out:

In medicine, getting things right is non-negotiable. Doctors have used dictation for years, but today's audio to text AI is on another level. A physician can speak their notes naturally after seeing a patient, and the AI transcribes them directly into the electronic health record (EHR), correctly interpreting complex medical terms.

By automating clinical documentation, AI frees up medical professionals to focus more on patient care and less on administrative tasks, ultimately reducing burnout and improving outcomes.

Education is another area seeing huge benefits. Students can record lectures and get back detailed, searchable notes. This is a lifeline for students with learning disabilities or anyone who just wants to review a key concept without scrubbing through an entire recording.

This screenshot from Lemonfox.ai shows just how straightforward the user experience can be. Even with powerful features like language selection and speaker diarization, the interface is clean and simple, making integration feel less intimidating for developers.

For businesses, AI transcription offers a direct line to customer insights. By transcribing support calls, companies can analyze customer sentiment, spot recurring problems, and evaluate agent performance. This isn't just data; it's a roadmap for improving the entire customer experience.

The legal field thrives on precision, and AI delivers. Lawyers are using transcription tools for depositions, court hearings, and client meetings. It's not only faster and more affordable than manual transcription, but it also creates a searchable digital record.

When you need to find one specific statement from hours of testimony, being able to search for it instantly can make all the difference in building a case.
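The kind of search described here is simple once a transcript carries timestamps. This sketch assumes the transcript is available as hypothetical `(start_seconds, text)` pairs and does a plain case-insensitive keyword match; the sample testimony is invented.

```python
def find_mentions(segments, keyword):
    """Return (timestamp, text) pairs for segments containing the keyword."""
    needle = keyword.lower()
    hits = []
    for start_seconds, text in segments:
        if needle in text.lower():
            hours, rem = divmod(int(start_seconds), 3600)
            minutes, seconds = divmod(rem, 60)
            hits.append((f"{hours:02d}:{minutes:02d}:{seconds:02d}", text))
    return hits


# Hypothetical transcript segments: (start time in seconds, spoken text).
testimony = [
    (12.0, "Please state your name for the record."),
    (1835.5, "I signed the contract on the third of March."),
    (5410.0, "The contract was amended two weeks later."),
]
mentions = find_mentions(testimony, "contract")
for stamp, text in mentions:
    print(stamp, text)
```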

With a ton of audio to text AI services out there, picking the right one can feel like a shot in the dark. The trick is to cut through the marketing noise and zero in on what actually matters for your specific situation. A little homework now will save you a world of headaches and hidden costs later.

This guide will walk you through the essential factors to weigh, giving you a clear framework to compare your options. From raw performance to data privacy, each piece of the puzzle is crucial to finding a tool that truly works for you.

Let's be blunt: accuracy is everything. While nearly every service boasts high precision, the truth is that performance can swing wildly based on audio quality, accents, background noise, and even the topic of conversation. Don't take their word for it—always test-drive a service with your own real-world audio files.

A solid benchmark to aim for is an accuracy rate of 95% or higher on clean audio. If your work involves a lot of technical terms or industry-specific jargon, look for tools that offer a custom vocabulary feature. This lets you teach the AI your unique terminology, which can make a massive difference in the final transcript.

An AI transcription tool is only as good as its output. If you spend more time fixing errors than the tool saves you, it has failed its primary purpose.
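When you test a service on your own audio, the standard way to put a number on accuracy is word error rate (WER): the word-level edits needed to turn the AI's output into a correct reference transcript, divided by the number of words in the reference. The sketch below is a minimal implementation under simple assumptions (lowercasing and whitespace splitting only, with no punctuation handling), and the sample sentences are invented. Treating accuracy as one minus WER is a common shorthand rather than a formal definition.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # Classic dynamic-programming edit distance, computed over words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


reference = "the quick brown fox jumps over the lazy dog near the river bank today"
hypothesis = "the quick brown fox jumps over the lazy dog near the river tank today"
wer = word_error_rate(reference, hypothesis)
print(f"WER: {wer:.1%}, accuracy: {1 - wer:.1%}")
```

Running this on a few minutes of your own hand-corrected audio gives a far more useful number than any published benchmark.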

Speed is another huge factor. How long does it take to get your transcript back? For journalists on a deadline or content creators churning out meeting summaries, a fast turnaround isn't just a nice-to-have; it's essential.

Choosing the right transcription partner involves more than just looking at the price tag. This table breaks down the key features to evaluate, what to look for, and why each one is critical to making an informed decision.

| Evaluation Criteria | What to Look For | Why It Matters |
|---|---|---|
| Accuracy Rate | Published benchmarks (e.g., WER), performance on noisy audio, and user reviews. | This is the core function. Low accuracy means more manual correction, defeating the purpose of using an AI tool. |
| Custom Vocabulary | The ability to add specific names, acronyms, and technical terms to a dictionary. | Drastically improves accuracy for specialized content (medical, legal, tech) by preventing misinterpretation of jargon. |
| Speaker Diarization | The tool’s ability to correctly identify and label different speakers in the audio. | Essential for transcribing interviews, meetings, and podcasts where knowing "who said what" is crucial. |
| Speed (Turnaround Time) | How quickly transcripts are delivered (real-time vs. hours). | Time-sensitive workflows, like live captioning or news reporting, depend on near-instantaneous results. |
| Data Security & Privacy | End-to-end encryption, clear data usage policies (no training on your data without consent), and compliance (GDPR, HIPAA). | Protects your sensitive information and ensures you maintain control over your own intellectual property. |
| API & Integrations | A well-documented, robust API for developers and seamless connections with other software. | Allows you to build transcription directly into your own apps or connect it to your existing workflow (e.g., Slack, Zapier). |
| Pricing Model | Clear, transparent pricing—either pay-as-you-go, subscription-based, or a hybrid model. | You need a model that fits your usage patterns and budget without surprising you with hidden fees. |

By using these criteria as a checklist, you can systematically compare different providers and find the one that aligns perfectly with your technical needs, budget, and security requirements.

The cost of AI transcription generally comes in two flavors, and knowing the difference is key to keeping your budget in check.

When you're comparing costs, always be on the lookout for hidden fees. Some providers tack on extra charges for features like speaker identification or timestamping, while others bundle everything into one price. Dig into the details to understand the true cost of the service. You can also check out guides that review the best AI transcription apps for podcasts to see how different pricing structures stack up.

With data privacy being a major concern these days, security is something you absolutely cannot afford to ignore. When you upload an audio file, you're placing your trust in that company's security practices. Take the time to actually read their privacy policy.

Look for providers who offer end-to-end encryption and have a clear policy stating they won't use your data for AI model training without your explicit permission. If you're handling sensitive information, make sure the service is compliant with regulations like GDPR or HIPAA.

Finally, think about how this tool will plug into the way you already work. For developers, a solid, well-documented API is a must-have. It's what allows you to build transcription directly into your own software. A good API makes integration straightforward, opening up a whole new world of possibilities for your products and internal systems.

Knowing how AI transcription works is one thing, but actually weaving it into your daily operations is where you’ll see the real payoff. It's not just about uploading a file and getting text back. True integration means building a process that delivers accurate results, protects privacy, and just fits with how you and your team already get things done.

Making that leap from using transcription occasionally to having it fully embedded requires a little forethought. If you nail down a few best practices from the start, you can sidestep common frustrations and get reliable results from day one.

The quality of your transcript is completely dependent on the quality of your audio. The old saying "garbage in, garbage out" has never been more true. To give the AI a fighting chance, you need to feed it clean, clear sound.

Here are a few simple steps that make a huge difference:

Think of it this way: if a human would struggle to understand a conversation on a bad phone line in a noisy airport, an AI will too. A few small tweaks to your recording habits can dramatically improve your results.

For businesses and developers, the real magic happens when you build transcription directly into your own software. This is where a good API (Application Programming Interface) comes in. Instead of someone having to manually upload files to a website, an API lets your application communicate directly with the transcription service.

This opens up all kinds of doors. Imagine a video conferencing app that automatically generates meeting summaries. Or a customer support platform that can analyze the sentiment of every call. A media company could even make its entire video archive instantly searchable by spoken word.

Tools like Lemonfox.ai are built for this, offering simple, well-documented APIs that developers can easily work with. This means you can add powerful transcription features to your own products without needing a PhD in machine learning, creating a ton of new value for your users along the way.
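To give a feel for what such an integration involves, here is a generic sketch of building a transcription request with Python's standard library. It does not describe any particular provider's API: the endpoint URL, the JSON field names (`audio_url`, `language`, `speaker_labels`), and the bearer-token scheme are all placeholders, so check your provider's documentation for the real ones. Many services expect a file upload rather than a URL.

```python
import json
import urllib.request

# Placeholder values: substitute the endpoint and fields from your provider's docs.
API_URL = "https://api.example.com/v1/transcriptions"  # hypothetical endpoint


def build_transcription_request(audio_url: str, api_key: str,
                                language: str = "en",
                                diarize: bool = True) -> urllib.request.Request:
    """Build (but do not send) a JSON POST asking for a transcript of a hosted file."""
    payload = {
        "audio_url": audio_url,      # hypothetical field names throughout
        "language": language,
        "speaker_labels": diarize,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def transcribe(audio_url: str, api_key: str) -> dict:
    """Send the request and parse the JSON response (requires network access)."""
    request = build_transcription_request(audio_url, api_key)
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


request = build_transcription_request("https://example.com/meeting.mp3", "YOUR_API_KEY")
print(request.get_method(), request.full_url)
```

Keeping request construction separate from sending makes the integration easy to unit test without touching the network.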

As you start using transcription more, two critical points will come up: data privacy and the need for a human touch. When you're dealing with sensitive conversations—client meetings, patient details, or confidential company strategy—security isn't optional.

Always go with a provider that takes privacy seriously. Look for one that deletes your data right after processing and complies with regulations like GDPR. At the same time, for those mission-critical tasks where you need 100% accuracy, like legal depositions or medical notes, it's smart to have a human review step. The AI can do the heavy lifting and get the transcript 95% of the way there in minutes, and then a person can quickly scan it for any final corrections. This hybrid approach gives you the best of both worlds: the incredible speed of AI and the final polish of human understanding.
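One practical way to set up that human review step is to have the software point the reviewer at the words the AI was least sure about. The sketch below assumes the service returns a confidence score per word, which some do and some do not. The `(word, confidence)` format, the 0.85 threshold, and the sample data are all illustrative.

```python
REVIEW_THRESHOLD = 0.85  # assumed cutoff; tune it against your own audio


def flag_for_review(words, threshold=REVIEW_THRESHOLD):
    """Wrap low-confidence words in [[...]] so a reviewer can spot them quickly."""
    marked = []
    for word, confidence in words:
        marked.append(f"[[{word}]]" if confidence < threshold else word)
    return " ".join(marked)


# Hypothetical (word, confidence) pairs as a transcription service might return them.
transcript_words = [
    ("Patient", 0.98), ("was", 0.99), ("prescribed", 0.97),
    ("metoprolol", 0.62), ("twice", 0.95), ("daily", 0.96),
]
review_copy = flag_for_review(transcript_words)
print(review_copy)
```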

We've covered a lot of ground on AI-powered transcription, but you probably still have a few questions rolling around in your head. That's completely normal. This technology is moving fast, so let's clear up some of the most common queries people have when they start using these tools.

Think of this as your quick-fire Q&A, designed to give you straightforward answers to the practical stuff.

This is the big one, isn't it? The short answer is: modern AI models can be incredibly accurate, often hitting 95% accuracy or even higher. But there’s a massive "if" attached to that number—it all hinges on the quality of your audio.

Give the AI a clean recording from a good microphone in a quiet room, and you'll get a near-perfect transcript.

But the real world is messy. Accuracy can take a hit from things like:

The best services handle specialized terminology by letting you add a custom vocabulary, which makes a world of difference for niche or technical audio.

Handing over sensitive audio files to an online service can definitely feel like a leap of faith. Security is a huge deal, and any provider worth their salt knows it. Always look for services that use strong encryption both in transit and at rest, so your data is protected on its way to them and while it's stored on their servers.

Beyond that, you need to read the fine print. Dig into the privacy policy to find out if the company uses your data to train its AI models. You should always have the final say on that.

When dealing with confidential information, stick with providers who are upfront about their data practices, offer compliance with standards like GDPR or HIPAA, and have clear policies for deleting your files. Your privacy shouldn't be an optional extra.

Yes, and this is where the magic really happens. This feature is called speaker diarization (or speaker labeling), and it's essential for any recording with more than one voice. Instead of spitting out a single, confusing block of text, the AI identifies who is speaking and when, labeling each part of the conversation (e.g., "Speaker 1," "Speaker 2").

This makes transcripts genuinely useful for things like:

Without it, you're left with a transcript that’s almost impossible to follow. With it, a chaotic conversation becomes a clean, organized script. It’s one of the key features that separates a basic tool from a professional-grade one.

Ready to see how fast, accurate, and secure transcription can be? With Lemonfox.ai, you can integrate a powerful speech-to-text API into your workflow for less than $0.17 per hour. Start your free trial and get 30 hours of transcription to see the difference for yourself at https://www.lemonfox.ai.