Published 9/20/2025

Picking the best speech recognition API really comes down to what your project needs. You've got the usual suspects—Google, AWS, and Microsoft—but also specialists like Lemonfox.ai that are shaking things up. While the tech giants give you a massive ecosystem to plug into, the best choice is often a balancing act between accuracy, speed, cost, and specific features like speaker diarization or custom vocabularies.

Choosing a speech-to-text API isn't just a technical detail; it's a strategic move. The API you pick directly affects your user experience, operational budget, and the overall quality of your product. In a market flooded with options, knowing the subtle differences between providers is the key to avoiding expensive mistakes and frustrating development cycles.

And this market is absolutely booming. It was valued at around USD 3.87 billion in 2024 and is expected to hit nearly USD 9.1 billion by 2029. This explosive growth is fueled by everything from remote work and e-learning to the countless IoT devices that depend on voice commands. You can read more about the speech-to-text market growth on grandviewresearch.com.

To get you started, let's look at the major players and where they shine. While every API on this list can handle transcription, they're not all created equal. Some are built for sprawling enterprise systems with tight cloud integration, while others focus purely on delivering the best accuracy and a straightforward developer experience.

Before we get into the nitty-gritty details, this table offers a quick snapshot to help you see which solutions might be a good fit for your goals right off the bat.

| API Provider | Key Strength | Ideal Use Case | Pricing Model |
|---|---|---|---|
| Google Speech-to-Text | Massive language support & model selection | Global applications needing broad dialect coverage | Pay-as-you-go, tiered |
| Amazon Transcribe | Deep integration with the AWS ecosystem | Businesses heavily invested in AWS services | Pay-as-you-go, tiered |
| Microsoft Azure | Strong enterprise features & security | Corporate environments requiring compliance | Pay-as-you-go, tiered |
| Lemonfox.ai | High accuracy & cost-effectiveness | Startups and developers needing affordable quality | Simple pay-as-you-go |

Think of this as the starting line. In the sections that follow, we'll dig deeper into the criteria that really matter—from Word Error Rate (WER) to real-time latency—so you can move from a general idea to a confident final decision.

When you're picking a speech-to-text API, you have to look past the flashy marketing and get into what actually matters for performance. To really stack them up against each other, you first need to get a handle on what affects speech-to-text accuracy and how different variables can throw off the results. Think of this as your practical checklist.

The first thing everyone talks about is accuracy, and for good reason. It’s usually measured by Word Error Rate (WER): the number of mistakes in a transcript (words added, missed, or swapped) divided by the number of words in the correct reference transcript. A lower WER is obviously better, but that number doesn't tell the whole story.
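To make the metric concrete, here is a minimal sketch of a WER calculation. It assumes the common definition, (substitutions + deletions + insertions) divided by the number of reference words, and a naive lowercase, whitespace-based tokenizer. The function name `word_error_rate` is purely illustrative, and a real evaluation would usually normalize punctuation and numbers first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    truth = "please call the billing department"
    output = "please call billing apartment"
    print(word_error_rate(truth, output))  # one deletion + one substitution over 5 words -> 0.4
```

Note that WER can exceed 1.0 when the transcript contains many extra words, which is one more reason not to read a single number in isolation.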

Performance can take a nosedive depending on the audio quality. An API might work brilliantly on a crisp podcast recording but completely fall apart with noisy street sounds, overlapping speakers, or thick accents. This is why you absolutely have to test these APIs with your own real-world audio. It's not optional.

If you're building something that needs an instant response, like live captions or a voice assistant, then latency becomes your make-or-break metric. This is the time it takes from the moment someone speaks to when you get the text back. Too much lag, and the user experience falls apart.

When you're testing for speed, keep an eye on these things:

Streaming APIs are built for this kind of real-time work. The trade-off is that they sometimes sacrifice a tiny bit of accuracy for speed, since they process audio in small pieces without the benefit of full sentence context.
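If you want numbers rather than impressions, a small timing harness helps. The sketch below is an assumption-laden example, not any provider's tooling: `transcribe` stands for whatever function you write to call the API under test, and the harness only measures request round-trip time per clip, which is a proxy for (not the same as) the speak-to-text delay a user perceives in a streaming session.

```python
import math
import statistics
import time
from typing import Callable, Dict, Sequence


def measure_latency(
    transcribe: Callable[[bytes], str], clips: Sequence[bytes]
) -> Dict[str, float]:
    """Time one transcribe() call per clip and summarize in milliseconds."""
    if not clips:
        raise ValueError("need at least one clip to measure")

    samples_ms = []
    for clip in clips:
        start = time.perf_counter()
        transcribe(clip)  # the text is ignored; only elapsed time matters here
        samples_ms.append((time.perf_counter() - start) * 1000.0)

    samples_ms.sort()
    # Nearest-rank 95th percentile over the sorted samples.
    p95_index = math.ceil(0.95 * len(samples_ms)) - 1
    return {
        "median_ms": statistics.median(samples_ms),
        "p95_ms": samples_ms[p95_index],
        "max_ms": samples_ms[-1],
    }
```

Looking at the 95th percentile and the maximum, not just the median, matters for voice features: a handful of slow responses is what users actually notice.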

The API you choose has to grow with you, whether you’re handling a few hundred audio files a day or a few million. Scalability is all about making sure the service can keep up with demand without slowing down or crashing. The big cloud players like Google and AWS are known for this, but many specialized APIs are also built to handle massive loads.

Once you’ve covered the basics, the advanced features are where you’ll find the real game-changers.

An API's true power often lies in its specialized capabilities. Features like speaker diarization, which identifies who spoke when, or custom vocabulary, which improves accuracy for industry-specific terms, can be the deciding factor for complex use cases like medical dictation or call center analytics.
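To show what diarization output lets you do downstream, here is a rough sketch that turns speaker-labeled segments into a readable transcript. The segment shape `(speaker, start_s, end_s, text)` is an assumption made for illustration; each provider returns its own structure, so you would adapt the unpacking to the response you actually get.

```python
from typing import Iterable, List, Tuple

# Assumed segment shape, for illustration only: (speaker, start_s, end_s, text)
Segment = Tuple[str, float, float, str]


def merge_speaker_turns(segments: Iterable[Segment]) -> List[Segment]:
    """Join consecutive segments from the same speaker into one turn."""
    turns: List[Segment] = []
    for speaker, start, end, text in sorted(segments, key=lambda s: s[1]):
        if turns and turns[-1][0] == speaker:
            prev_speaker, prev_start, prev_end, prev_text = turns[-1]
            turns[-1] = (prev_speaker, prev_start, max(prev_end, end), f"{prev_text} {text}")
        else:
            turns.append((speaker, start, end, text))
    return turns


def format_transcript(turns: Iterable[Segment]) -> str:
    """Render one line per turn, prefixed with its start time and speaker."""
    return "\n".join(
        f"[{start:.1f}s] {speaker}: {text}" for speaker, start, _end, text in turns
    )
```

For call center analytics, merged turns like these are a convenient unit for measuring talk time per speaker or running sentiment analysis per side of the conversation.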

Here are a few other features that can make a huge difference:

A smart evaluation weighs all of these factors against what your project actually needs. It’s about finding an API that not only works well but also fits perfectly into your workflow.

Choosing the right speech recognition API isn't just about picking the one with the highest advertised accuracy. You have to get your hands dirty and compare the market leaders directly. Real-world performance can swing wildly depending on everything from audio quality and background noise to accents and industry-specific jargon.

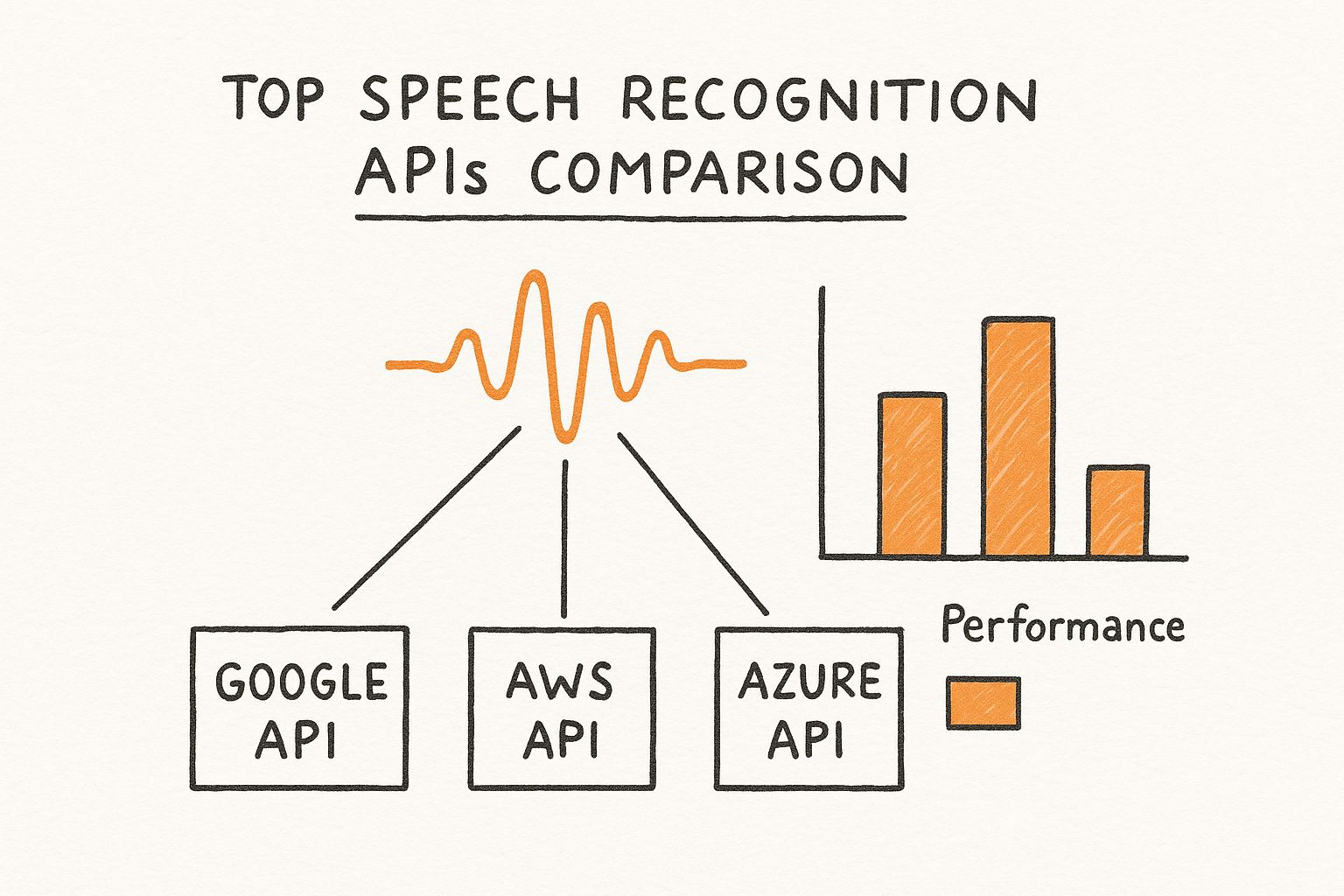

Let’s put four of the top contenders under the microscope: Google Cloud Speech-to-Text, Amazon Transcribe, Microsoft Azure Speech to Text, and Lemonfox.ai. We'll break down how they actually stack up where it matters most.

This graphic gives a high-level view of how these APIs work to process audio data.

While they all perform the same basic function, the models and architecture under the hood are what create very different outcomes for developers on the front lines.

Google has been a giant in this space for a long time, and its main strength has always been its incredible language support and a wide array of transcription models. It offers specialized models for things like phone calls, video, and even medical dictation, giving developers the tools to fine-tune transcription for specific situations. That kind of flexibility is a huge win for global applications dealing with diverse dialects.

The catch? Recent benchmarks show Google’s standard API is starting to lag behind newer competitors in raw accuracy, especially when you throw challenging audio at it. And while the feature list is impressive, the developer experience can feel clunky. You often have to navigate a complex setup within the Google Cloud Platform just to get your first file transcribed.

Amazon Transcribe’s killer feature is its seamless connection to the sprawling AWS ecosystem. If your entire tech stack is already living on AWS—with files in S3 and apps on EC2—then plugging in Transcribe is incredibly straightforward. It just works, keeping your entire workflow under one convenient roof.

In terms of performance, Transcribe is a solid, reliable workhorse for common tasks like transcribing customer support calls or media files. It comes packed with useful features like automatic language identification and speaker diarization. It might not always win the gold medal for accuracy, but its dependability and deep AWS integration make it the default choice for many established businesses. For a deeper dive into the market, check out this comprehensive speech to text software comparison.

Microsoft Azure built its speech-to-text service with the enterprise squarely in its sights. It shines when it comes to security, compliance, and deep customization, making it a natural fit for large companies in regulated fields like finance or healthcare. Azure’s ability to build custom speech models trained on your proprietary data can be a game-changer for getting domain-specific terms right.

The platform also has powerful tools for building out voice-enabled applications. But, like its big-cloud rivals, getting started can be a complex affair. Its performance is generally consistent, though it can struggle to keep up with more specialized APIs when dealing with heavy accents or noisy environments.

Key Differentiator: The choice between the cloud giants often boils down to your existing ecosystem. Google gives you model variety, AWS offers unparalleled integration, and Azure brings enterprise-grade security and customization to the table.

Unlike the big cloud providers trying to be everything to everyone, Lemonfox.ai has one mission: deliver the absolute best accuracy through a simple, developer-friendly API. This sharp focus means it often blows general-purpose models out of the water, particularly with messy, real-world audio full of background noise and varied accents. For startups and developers where the quality of the transcript is non-negotiable, this is a massive advantage.

Lemonfox.ai also wins on simplicity. The pricing is straightforward, and the API is incredibly easy to use, letting you skip the complicated setup rituals of the larger platforms. You can be up and running in minutes, making it a fantastic choice for teams that need to ship fast without compromising on quality. This specialized approach is tapping into a market that's exploding—forecasts show the speech-to-text API market was valued at USD 5 billion in 2024 and is on track to hit USD 21 billion by 2034, growing at a CAGR of 15.2%.

To really understand the trade-offs, a side-by-side technical comparison is essential. This table breaks down the key features and capabilities of each API, highlighting their strengths and ideal use cases.

| Feature | Google Speech-to-Text | Amazon Transcribe | Azure Speech to Text | Lemonfox.ai |
|---|---|---|---|---|
| Best For | Global apps, model variety | AWS-native workflows | Enterprise, custom models | High accuracy, startups |
| Real-Time Streaming | Yes, with multiple models | Yes, with low latency | Yes, highly configurable | Yes, optimized for speed |
| Speaker Diarization | Yes | Yes, with speaker labels | Yes | Yes |
| Custom Vocabulary | Yes, with adaptation | Yes | Yes, with advanced tuning | Yes |
| Developer Experience | Moderate, requires GCP setup | Moderate, requires AWS setup | Complex, enterprise-focused | Simple, fast integration |

In the end, the "best" API truly depends on what your project needs. If you're already all-in on the AWS ecosystem, Transcribe is a no-brainer. For an enterprise that needs deep customization, Azure is a powerhouse. But if your top priority is getting the most accurate transcript possible from a simple, cost-effective API, Lemonfox.ai makes a very compelling argument.

Benchmarks and leaderboards are a good starting point, but they don't tell the whole story. The best speech recognition API is simply the one that solves your problem with the least amount of friction. An API that flawlessly transcribes a pristine podcast recording might completely fall apart when faced with the messy, overlapping audio from a call center.

Getting this choice right is critical because it impacts everything downstream. An API with high latency will make a voice command feature feel clunky and unresponsive to users. Similarly, one with so-so accuracy could create huge compliance headaches if you're transcribing legal depositions. The real goal here is to look past the marketing claims and match the tool to the task at hand.

The demand for this tech is exploding for a reason. Valued at USD 10.46 billion back in 2018, the voice recognition market was projected to roughly triple by 2025. This growth is fueled by AI improvements that are finally making these tools practical for everyday business needs. You can dig into more data on the growth of voice recognition technologies at grandviewresearch.com.

When you're trying to make sense of call center recordings, a few things are non-negotiable: you need to know who said what (speaker diarization), it has to work on low-quality phone audio (8kHz), and it must recognize your company's unique product names and jargon. The whole point is to figure out what customers are feeling, how agents are performing, and what trends are emerging from thousands of calls.

But if raw accuracy on those tough calls is your number one priority, Lemonfox.ai often pulls ahead. It can deliver cleaner transcripts from challenging audio without the heavy infrastructure of a major cloud provider.
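Before benchmarking call recordings, it is worth confirming what kind of audio you actually have. The sketch below uses Python's standard `wave` module to read the sample rate, channel count, and duration of an uncompressed WAV file. The helper names and the 8,000 Hz threshold are illustrative assumptions, and compressed formats such as MP3 would need a different reader.

```python
import wave
from typing import BinaryIO, Dict, Union


def describe_wav(source: Union[str, BinaryIO]) -> Dict[str, float]:
    """Read basic properties from a WAV file path or binary file object."""
    with wave.open(source, "rb") as wav:
        rate = wav.getframerate()
        return {
            "sample_rate_hz": rate,
            "channels": wav.getnchannels(),
            "duration_s": wav.getnframes() / rate,
        }


def is_telephony_audio(info: Dict[str, float], max_rate_hz: int = 8000) -> bool:
    """True when the sample rate is at or below the assumed phone-quality cutoff."""
    return info["sample_rate_hz"] <= max_rate_hz
```

If most of your files come back as 8 kHz mono, make sure the test set you send to each API is drawn from that same audio rather than from cleaner studio recordings.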

For subtitling videos or podcasts, you need more than just accurate words—you need proper punctuation and formatting that makes sense to a reader. The audio itself is a mixed bag, ranging from crystal-clear studio dialogue to chaotic on-the-street interviews filled with background noise.

For media, the API has to be tough. It can't get tripped up by background music or street noise. An error rate that looks small on a spreadsheet can become a glaring, nonsensical distraction when it pops up on screen.

When you’re building voice controls for an app, a smart home device, or an in-car system, one thing matters more than anything else: speed. The response has to feel instant. If there's a delay, the experience is broken. Accuracy on short phrases is key, but it has to happen in the blink of an eye.

The game changes here. You aren't transcribing a long conversation; you're trying to catch specific trigger words and commands in near real-time.

In fields like healthcare and law, accuracy isn't a nice-to-have; it's a hard requirement. One wrong word in a doctor's note or a legal transcript can have massive consequences. These use cases demand the absolute lowest Word Error Rate (WER) possible and need to handle dense, specialized terminology without stumbling.

That said, if your main goal is getting the most accurate transcript of complex terms, an API like Lemonfox.ai can often beat the general-purpose models. It’s a compelling option if you can manage sensitive data within your own secure environment.

When you're picking a speech recognition API, cost is always a huge factor. But here's the catch: the sticker price per minute is rarely the whole story. Pricing models can be a real maze of pay-as-you-go rates, confusing tiers, free credits, and nickel-and-dime charges for premium features. It makes a true apples-to-apples comparison almost impossible unless you know what to look for.

To figure out what you'll actually spend, you have to think in terms of total cost of ownership (TCO). This means adding up every single potential expense, from setup and data transfer to every feature you'll use. What looks like a bargain at first can easily become a budget-buster once all the hidden costs pile up.

Think of the advertised per-minute rate as just the starting line. Many providers lure you in with a low number, only to add extra charges for the very features that make their service useful. You absolutely have to read the fine print.

Here are a few of the most common hidden costs to keep an eye out for:

These "small" charges add up fast, particularly when you're operating at scale. A service with a slightly higher base rate but all-inclusive features often turns out to be the more economical choice in the end.

A common pitfall is underestimating the cost of essential features. An API might offer a low transcription rate, but if you have to pay extra for speaker labels and punctuation on every call, your final bill could be double what you budgeted for.

To make a smart decision, you need to run the numbers based on your real-world needs. Don't just compare single-minute prices; build a scenario that mirrors your actual workload.

Let’s take a common example: a business that needs to transcribe 10,000 minutes (about 167 hours) of two-person calls each month and needs speaker diarization on every one of them.

Here’s how the math might shake out:

| Provider | Base Rate (per hour) | Feature Cost (Diarization) | Estimated Monthly Total (167 hours) |
|---|---|---|---|
| Provider A | $0.37 | Included | $61.79 |
| Provider B | $0.30 | +$0.15/hour | $75.15 |
| Provider C | $0.24 | +$0.20/hour | $73.48 |

As you can see, the provider with the lowest base rate isn't the cheapest overall once feature costs are factored in. Provider C starts at just $0.24 per hour but ends up at $73.48 for the month, while Provider A, with its inclusive pricing, wins at $61.79.
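The same comparison is easy to script so you can rerun it with your own volumes. This is a minimal sketch using the illustrative rates from the scenario above; the `Plan` class and its field names are made up for the example, and a real model would add any other line items your provider charges for.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    name: str
    base_rate_per_hour: float
    diarization_per_hour: float = 0.0  # 0.0 means the feature is included


def monthly_cost(plan: Plan, hours: float) -> float:
    """Total monthly cost in dollars, rounded to cents."""
    return round((plan.base_rate_per_hour + plan.diarization_per_hour) * hours, 2)


# Illustrative rates from the example scenario, not real price quotes.
PLANS = [
    Plan("Provider A", 0.37),
    Plan("Provider B", 0.30, 0.15),
    Plan("Provider C", 0.24, 0.20),
]
HOURS_PER_MONTH = 167  # about 10,000 minutes

totals = {plan.name: monthly_cost(plan, HOURS_PER_MONTH) for plan in PLANS}
cheapest = min(totals, key=totals.get)

if __name__ == "__main__":
    for name, total in totals.items():
        print(f"{name}: ${total:.2f}")
    print(f"Cheapest: {cheapest}")
```

Changing `HOURS_PER_MONTH` or adding another per-hour field makes it quick to see how the ranking shifts as your workload or feature set changes.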

This is exactly why a transparent, all-in-one pricing model—like the one from Lemonfox.ai—is so refreshing. By bundling key features into one simple, competitive rate (under $0.17 per hour), they take the guesswork out of the equation and let you predict your expenses accurately. This straightforward approach lets you focus on building your product, not trying to decipher a complex monthly bill.

While the tech giants provide massive, do-everything platforms, specialized tools often deliver better results by focusing on a single problem. This is exactly where Lemonfox.ai enters the conversation. It’s a powerful alternative for developers who need the best speech recognition API without the overhead and confusing costs of a full cloud suite.

Lemonfox.ai’s entire platform is built from the ground up for one thing: state-of-the-art transcription accuracy. It’s not just another feature bundled into a huge ecosystem; it’s engineered specifically for messy, real-world audio.

This singular focus means it often beats general-purpose models, especially with tricky audio filled with background noise, diverse accents, or poor recording quality. If the transcript's integrity is non-negotiable—think media subtitling or analyzing user-generated content—that specialized accuracy is a game-changer.

Another area where Lemonfox.ai really shines is its refreshingly simple and transparent pricing. So many developers get burned by the confusing billing of major cloud providers, where essential add-ons like speaker diarization unexpectedly drive up the bill. Lemonfox.ai bundles these critical features into one clear, competitive rate, making your costs completely predictable.

The screenshot below, taken from their homepage, gets straight to the point.

This visual instantly tells you what they're about: top-tier performance at a fraction of the cost you'd expect.

That straightforward philosophy is baked right into the API design. You can integrate it and start transcribing in minutes, skipping the convoluted setup and IAM permission nightmares common with bigger platforms. The goal is to get you productive, fast, without needing a dedicated engineer just to manage the integration.

For startups and agile teams, speed is everything. An API that just works—delivering incredible accuracy without a steep learning curve or surprise bills—is an invaluable tool for accelerating product development.

Lemonfox.ai makes a strong case for picking a specialist. It’s built for projects where transcription quality is paramount and developer time is a precious resource. By cutting out the bloat and focusing on delivering the most accurate transcripts through a dead-simple API, it stands out as a genuine alternative to the big names.

Diving into speech-to-text can feel a bit overwhelming, and you're bound to have questions. Whether you're hunting for the best speech recognition API or just trying to get your bearings, let's clear up a few of the most common ones.

Which API is the most accurate? That's the million-dollar question, and the honest answer is: it completely depends on your audio.

While big players like Google have solid, general-purpose models, you'll often find that a specialized service like Lemonfox.ai pulls ahead, especially with tricky audio—think background noise, heavy accents, or industry-specific jargon. The only way to know for sure is to run your own bake-off. Test a few top contenders with your actual audio files to see who really delivers.

Pricing is all over the map. Most services operate on a pay-as-you-go model, billing you for every minute of audio you process.

The final bill usually depends on a few factors: the specific AI model you choose, whether you need real-time or batch transcription, and if you’re using advanced features like speaker diarization. The good news is that many providers, including Lemonfox.ai, give you a healthy free tier to experiment with before you commit.

A key takeaway is to look beyond the base rate. An API with a slightly higher per-minute cost but inclusive features can often be more cost-effective than a cheaper one with numerous add-on fees.

Getting an API up and running is usually pretty painless. At its core, you're just making a secure HTTP request from your app to the API's endpoint.

To make it even easier, providers offer official Software Development Kits (SDKs) for popular languages like Python, JavaScript, and Java. The process is straightforward:

This setup means you can get from zero to a working integration in no time.
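As a rough illustration of that HTTP request, here is a sketch using only Python's standard library. Everything provider-specific in it is an assumption: the endpoint URL is a placeholder, and the bearer-token header, the raw `audio/wav` body, and the `text` field in the JSON response are common conventions rather than any particular vendor's contract. Check your provider's documentation for the real values, or use its official SDK.

```python
import json
import urllib.request

# Placeholder endpoint for illustration only; not a real service.
API_URL = "https://api.example.com/v1/transcribe"


def build_request(audio: bytes, api_key: str, url: str = API_URL) -> urllib.request.Request:
    """Prepare an authenticated HTTPS POST carrying the raw audio bytes."""
    return urllib.request.Request(
        url,
        data=audio,
        method="POST",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Content-Type": "audio/wav",           # assumed upload format
        },
    )


def transcribe(audio: bytes, api_key: str, url: str = API_URL, timeout: float = 30.0) -> str:
    """Send the audio and return the transcript from an assumed 'text' field."""
    request = build_request(audio, api_key, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["text"]
```

Keeping request construction separate from sending makes the integration easy to unit test without touching the network, and keeps the API key out of everything except one function call.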

Ready to see what a simple, powerful, and accurate API can do for your project? Start transcribing for free today with Lemonfox.ai and experience the difference yourself.