First month for free!

Get started

Published 10/5/2025

When you're looking for the best speech to text software, a few names consistently come to the top. For developers and businesses focused on top-tier accuracy without breaking the bank, Lemonfox.ai is a standout choice for API-driven tasks. Then you have giants like Google Speech-to-Text with its massive, scalable infrastructure, and OpenAI's Whisper for those who want a powerful, open-source model.

The right decision really comes down to what you're trying to achieve. It’s all about balancing cost, how easily the tool fits into your workflow, and whether you need specific features like identifying different speakers.

Let's be honest, picking the "best" transcription tool isn't about finding one single winner for everyone. It's about matching the right technology to your specific goal. A journalist transcribing a podcast with multiple guests has completely different needs than a developer building a voice command feature into an app.

This guide is designed to cut through the marketing fluff and focus on what actually matters in the real world. We'll evaluate the top platforms based on a few core principles that truly dictate their performance day-to-day.

To give you a quick overview, the table below summarizes the key players we'll be dissecting. Think of it as a starting point to see which platforms might be a good fit before we get into the nitty-gritty details.

| Software | Best For | Key Differentiator | Pricing Model |

|---|---|---|---|

| Lemonfox.ai | Developers & Businesses Needing High Accuracy on a Budget | Unbeatable price-to-performance ratio and developer-friendly API | Pay-as-you-go |

| Google Speech-to-Text | Large-Scale Enterprise Applications | Deep integration with the Google Cloud ecosystem and extensive language support | Tiered pay-as-you-go |

| OpenAI Whisper | Researchers & Hobbyists Needing a Powerful Open-Source Model | State-of-the-art accuracy from a self-hosted, open-source model | Free (requires self-hosting) |

This at-a-glance comparison helps frame the conversation. Now, let's dive deeper into how each of these solutions performs in practice.

To really pick the right speech-to-text software, it helps to peek behind the curtain at the tech doing all the heavy lifting. The core engine is something called Automatic Speech Recognition (ASR), a field that’s been completely transformed by AI and machine learning in recent years.

If you remember the clunky dictation tools of the past, you know they struggled with accents, background noise, or even just fast talkers. Today’s ASR is a different beast entirely. It relies on sophisticated neural networks trained on mountains of spoken data. They don't just "hear" words; they understand context, making them smart enough to know when you mean "write" versus "right."

This leap forward is why the global ASR market is set to skyrocket from USD 4.41 billion in 2024 to a massive USD 59.4 billion by 2035. It’s being adopted everywhere—from hospitals to call centers—proving just how vital this technology has become.

The brain of any ASR system is its machine learning model. Imagine it as a student that has listened to millions of hours of audio, learning to connect the tiniest sounds (phonemes) into words and coherent sentences.

When it comes to these models, there are two main philosophies:

This is a critical distinction. A generalist model is a jack-of-all-trades, but a specialized one is a master of one. For anyone wanting a deeper technical breakdown, the articles on Parakeet AI's blog for technical insights are a great resource.

Key Insight: The choice between a generalist and a specialized model is the single biggest factor in transcription accuracy. If your audio is packed with industry-specific terms, a service using a specialized model—like those from Lemonfox.ai—will almost always give you better results. It catches the niche vocabulary that a generalist tool simply won't recognize.

Seeing a 95% accuracy rate on a website looks great, but that number doesn't tell the whole story. Real-world conditions can dramatically change how well a model actually performs.

Getting a feel for these variables is how you spot the subtle but crucial differences between services.

At the end of the day, understanding these fundamentals lets you see past the sales pitch and judge speech-to-text software on what really counts: the technology driving its performance.

Picking the right speech-to-text software isn't about ticking boxes on a feature list. To find the best fit, you have to look past the marketing hype and see how these tools actually perform when things get messy. It's one thing for an API to transcribe a pristine, single-speaker audio file—it's another thing entirely to make sense of a podcast with hosts talking over each other or a customer service call with a dog barking in the background.

This is exactly why a detailed, side-by-side analysis is so important. We're going to evaluate the leading platforms on what truly matters: raw accuracy in less-than-ideal conditions, the quality of their speaker identification, and what it really costs to get a transcript you can actually use.

The ultimate measure of any speech-to-text tool is its accuracy. But even that can be a deceptive number. A 98% accuracy rate sounds great, but if the 2% of errors are all your critical keywords, product names, or technical terms, the transcript is practically worthless.

Let’s put a few platforms to the test in a challenging, real-world scenario: transcribing a 30-minute podcast episode. The recording features two hosts with different accents and a remote guest who has a slight audio lag.

Google Speech-to-Text: As you'd expect from an industry giant, Google's models are seriously robust. It handles the hosts' clear audio with ease. However, it sometimes gets tripped up by the remote guest, occasionally confusing their voice with background static and merging their sentences into one of the hosts' lines.

OpenAI Whisper: The open-source powerhouse, Whisper, shows off its incredible accuracy, even with accents, and nails nearly all the technical jargon discussed. Its weak spot can be speaker diarization, where it might label a long monologue from one host as coming from two different people.

Lemonfox.ai: Built from the ground up for high performance without the high cost, Lemonfox.ai really shines here. It doesn't just capture the technical terms with impressive fidelity; it excels at speaker diarization, correctly attributing who said what even when the hosts briefly talk over each other. This is where its specialized models give it a clear edge.

Key Differentiator: While most top-tier platforms handle clean audio without breaking a sweat, the real test is messy, complex audio. A solution like Lemonfox.ai that maintains high accuracy and reliable speaker identification under these conditions delivers a transcript that needs far less manual cleanup, saving you both time and money.

Speaker diarization—knowing who spoke and when—is a make-or-break feature for a huge number of use cases. Without clear speaker labels, a transcript is just a wall of text. It's useless for analyzing customer calls, drafting meeting minutes, or editing video interviews.

Think about processing a customer support call to review an agent's performance. If you can't perfectly distinguish the agent's words from the customer's, the entire analysis is flawed. This is why the quality of this feature is such a critical point of comparison.

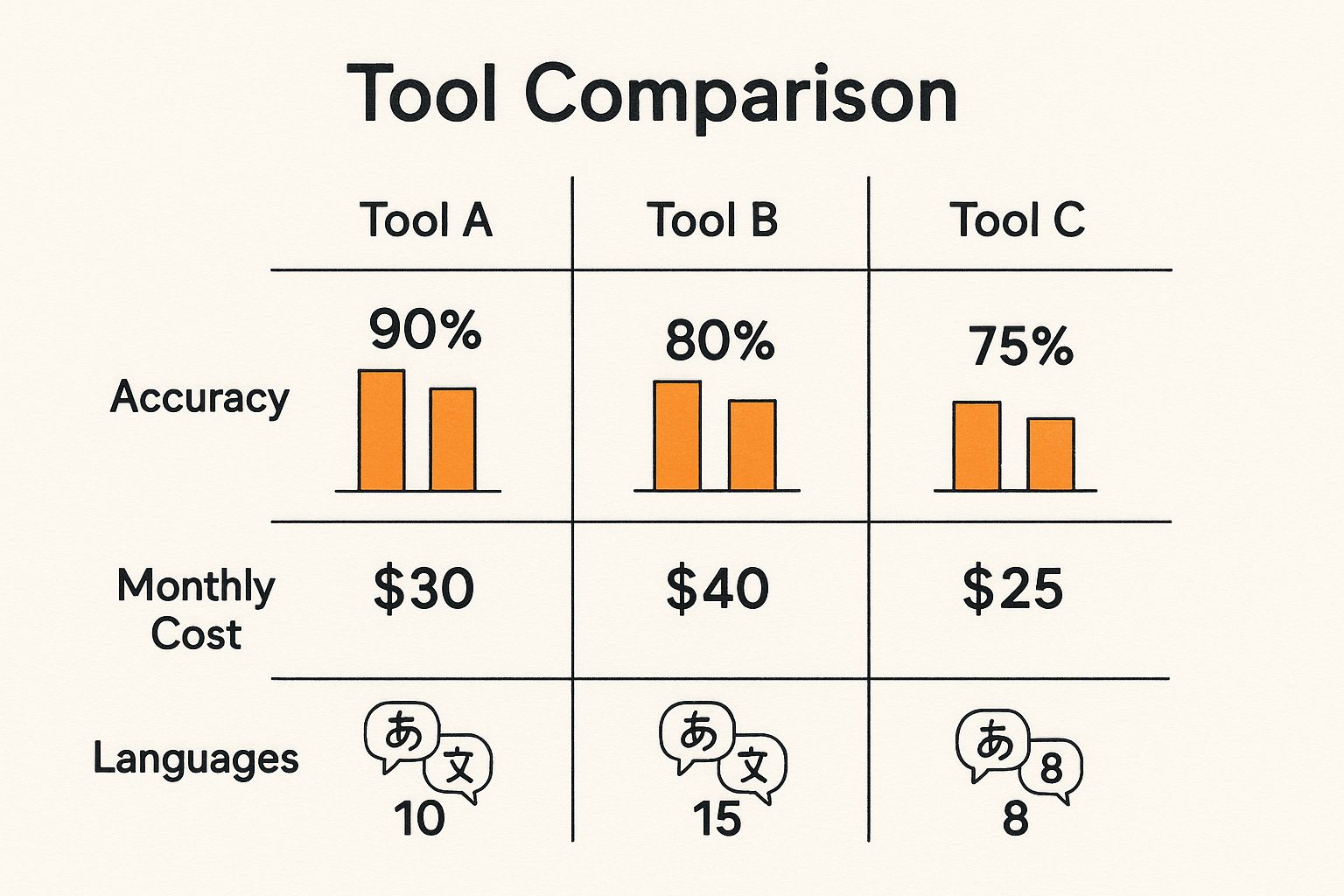

The infographic below gives a great visual summary of how the top tools stack up on key metrics like accuracy, cost, and language support.

This kind of breakdown quickly shows you the trade-offs. You might see one service offering more languages but at a higher price, while another prioritizes sheer accuracy above all else.

Beyond core accuracy, the best speech-to-text software offers a whole suite of features designed for specific jobs, from real-time transcription for live events to custom vocabularies for industry jargon. The pricing is just as varied, with models ranging from simple pay-as-you-go to complicated, tiered enterprise plans.

To cut through the noise, a structured comparison is the best way to see how things really stack up.

Here’s a clear breakdown of how the top providers perform on the metrics that count—accuracy, key features, and cost. This table helps visualize the value proposition each one offers.

| Provider | Accuracy on Clean Audio | Accuracy on Noisy Audio | Speaker Diarization Quality | Real-Time Transcription | Cost Per Audio Hour |

|---|---|---|---|---|---|

| Lemonfox.ai | 98%+ | High | Excellent | Yes | <$0.17 |

| Google STT | 97%+ | Good | Good | Yes | ~$1.44 (Standard) |

| OpenAI Whisper | 98%+ | High | Varies (Model Dependent) | No (Natively) | Free (Self-Hosted) |

This simple table reveals the crucial dynamics at play. While Whisper is technically "free," that doesn't account for the significant engineering time and server costs needed to set it up and keep it running. Its total cost of ownership is much higher than zero. Google offers a powerful, scalable solution, but you definitely pay a premium for it.

This is where Lemonfox.ai hits a sweet spot. It delivers accuracy that goes head-to-head with the best models out there but at a tiny fraction of the cost. For businesses and developers who need a straightforward, high-performance API without the enterprise price tag, that's a massive advantage. At scale, the difference is staggering: transcribing 1,000 hours of audio would cost less than $170 with Lemonfox.ai compared to $1,440 with Google's standard model.

So, which platform is actually right for you? It all comes down to your specific needs. When looking at different options, it can be helpful to review comparisons for specific use cases, like these top voicemail to text apps, to see how features perform in a focused application.

Based on our analysis, here are some clear recommendations for different scenarios:

For Media Production & Podcasting: You need flawless accuracy and speaker diarization. Period. A tool that can handle multiple speakers, background noise, and niche terminology is a must. Lemonfox.ai is the clear winner here because of its excellent diarization and high accuracy on complex audio, which drastically cuts down on manual editing.

For Large-Scale Enterprise Integration: If your company is already deep in the Google Cloud ecosystem and needs a single solution for dozens of languages across various internal apps, Google Speech-to-Text is a logical, if pricey, choice. Its scalability and deep integration are hard to beat.

For Researchers and AI Developers: If you have the technical chops to run your own infrastructure and need a powerful, customizable model for academic or experimental work, OpenAI's Whisper is an unbeatable open-source option. You get state-of-the-art accuracy with no direct licensing fees, as long as you can handle the operational side.

Ultimately, the best choice is the one that aligns with your technical requirements, your use case, and your budget. By looking past the marketing and testing these tools in realistic situations, you can make a smart decision that delivers real value.

It’s one thing to talk about transcription accuracy in percentages, but it’s another to see how that technology actually changes the way people work. The real value of these tools comes alive when they’re applied to specific, everyday business problems. From a bustling newsroom to a quiet doctor's office, accurate transcription is no longer a "nice-to-have"—it's a core part of the modern workflow.

The market reflects this shift. Valued at $3.81 billion in 2024, the global speech-to-text API market is expected to surge to $8.57 billion by 2030. This isn't just hype; it's driven by practical adoption in fields like media, healthcare, and customer service, where turning conversations into usable data creates a serious competitive edge. You can dig into the numbers yourself in the full market analysis from Grand View Research.

If you've ever worked in media, you know that time is everything. Manually transcribing a one-hour interview can easily eat up 4-6 hours of your day. That’s a soul-crushing task that grinds creativity to a halt. This is where a top-tier speech-to-text service completely changes the workflow.

Think about a documentary editor sifting through hours of interviews to find one perfect soundbite. Instead of endlessly scrubbing through audio, they can just search a transcript for a keyword. With precise timestamps and speaker labels, they can instantly find who said what and jump directly to that moment in their edit. This isn't just about saving time; it's about making the creative process feel fluid and intuitive.

Here’s what that looks like in practice:

For these kinds of high-stakes media projects, you can't afford mistakes. This is where a solution like Lemonfox.ai really shines. Its accuracy and ability to nail speaker identification mean the transcript is almost ready to go right out of the box, cutting down the clean-up work that bogs down so many other tools.

In a medical setting, accuracy is non-negotiable. It’s not just about getting the words right; it's about patient safety and legal compliance. Doctors spend a huge chunk of their time on administrative work, especially updating patient records. Dictation software lets them capture notes in their own words, right after seeing a patient.

This immediate transcription is crucial for keeping electronic health records (EHR) detailed and up-to-date. An accurate record ensures that diagnoses, treatments, and patient histories are captured correctly, which is vital for providing consistent care. A tool that fumbles complex medical terms isn't just unhelpful—it's dangerous.

Situational Recommendation: For anyone in healthcare, the best speech-to-text software has to be fluent in medical jargon. A generic tool will stumble over clinical terms, but a system with a more sophisticated model ensures every note is precise. This protects both the patient and the provider.

Every call center is sitting on a mountain of data, but most of it is trapped in audio recordings. Transcribing customer calls unlocks that data and turns it into a powerful tool for improving the business. Once those thousands of conversations are converted into searchable text, companies can finally start to:

A simple dictation app won't cut it here. You need a powerful API that can handle huge volumes of audio, reliably tell the difference between the agent and the customer, and maintain accuracy even when there's background noise. For simple internal memos, a basic tool might be fine, but for serious, data-driven analysis, you need an engine built for the job.

When you're shopping for speech-to-text software, it’s so easy to get fixated on that price-per-hour number. But here’s the thing I’ve learned from years in this space: that number is just one small piece of a much larger puzzle. To really understand what you'll be spending, you need to look at the total cost of ownership (TCO). That's where you'll find the true return on your investment.

Believe it or not, the most expensive part of transcription often isn't the software. It's the human labor you have to pay to fix its mistakes. A "cheaper" service that spits out inaccurate transcripts can become a massive time and money sink, forcing your team to spend hours cleaning up the mess. That hidden expense is where the real cost lives.

Most transcription services fall into one of three pricing buckets. Each has its pros and cons, and knowing the difference is the first step toward making a smart call.

The trick is to match the model to your actual workflow. A small team transcribing 20 hours of audio a month has a completely different set of financial needs than an enterprise churning through thousands of hours.

The Accuracy Equation: A service with 98% accuracy isn't just a little better than one with 90% accuracy—it’s a world apart. That lower-accuracy transcript demands exponentially more time to review and edit, quickly turning a small price difference into a major operational headache. Investing in better accuracy upfront always pays off.

Let's run the numbers on two real-world scenarios. Imagine a startup processing 100 hours of audio each month, and we'll value a team member's time at $25 per hour.

Scenario 1: The "Cheaper" Low-Accuracy Service

Scenario 2: A High-Accuracy Solution like Lemonfox.ai

The math doesn't lie. The "cheaper" option ends up being more than 10 times more expensive once you account for the human cost of cleaning it up. Opting for a high-accuracy, efficient solution like Lemonfox.ai doesn't just save you a few bucks on the subscription; it saves you hundreds on labor and delivers a far better return.

When you get right down to it, the "best" speech-to-text software really boils down to what you need it to do. There's no one-size-fits-all answer, but after digging into the details, some clear frontrunners emerge for specific jobs.

If you’re a podcaster, a media producer, or anyone who simply can't compromise on transcript quality, Lemonfox.ai is tough to beat. Its knack for handling tricky audio and accurately identifying different speakers means you’ll spend far less time on tedious manual edits. For developers looking for a powerful API that won't break the bank, Lemonfox.ai also offers a compelling balance of performance and price.

On the other hand, if you're a large organization already heavily invested in the Google Cloud platform, sticking with Google Speech-to-Text makes sense for its seamless integration and massive scale, even if it comes with a higher price tag.

This space is moving incredibly fast, and we're already seeing transcription evolve into something much more. The market reflects this explosion, projected to grow from USD 10.46 billion in 2018 to USD 31.8 billion by 2025. This isn't just hype; it's driven by smarter AI weaving itself into the tools we use every day. You can get a deeper look at the trends fueling this market growth.

What’s just around the corner? Imagine tools that can do more than just transcribe. We're on the verge of real-time emotional analysis that can sense a customer's frustration on a support call. Think of transcripts that automatically create action items in your project management software right after a meeting ends.

The Future is Contextual: The next generation of speech-to-text tools won't just hear words; they'll understand what they mean. They'll know the difference between a brainstorming idea and a final decision, summarize key takeaways like a human assistant, and genuinely make our workflows smarter.

Choosing the right tool today is about solving an immediate problem, and for high-stakes accuracy, Lemonfox.ai is a fantastic solution. But keeping an eye on these future developments ensures that whatever you choose, it’s ready not just for today, but for what’s coming next.

When you're trying to pick the right speech-to-text tool, you'll naturally run into some practical questions about how these services actually perform in the wild. Getting straight answers is key to choosing a tool that genuinely fits your needs and doesn't leave you with a mountain of edits.

You’ll see a lot of services advertising 95% accuracy, but that number usually comes from tests using pristine, studio-quality audio. For the messy reality of most business use cases—think team meetings with background chatter or calls with variable audio quality—anything that consistently stays above 90% is a more realistic and solid benchmark.

The truly top-tier services, however, can push that to 98% or higher, even when the audio isn't perfect. That seemingly small percentage jump makes a massive difference in how much time you save on manual corrections.

This is where the rubber really meets the road, and performance can vary wildly from one platform to another. It all comes down to the data the AI was trained on.

The best platforms have been fed massive, diverse datasets covering a huge range of dialects and accents, making them far more reliable. Still, very specific regional accents can sometimes trip up even the most sophisticated models if they weren't well-represented in the training data.

Absolutely. This feature is known as speaker diarization (or speaker labeling). It’s what automatically figures out who is speaking and when, creating a transcript that's easy to follow.

For anything involving more than one person—interviews, podcasts, focus groups, or meetings—this is a non-negotiable feature. The quality of the diarization is a major selling point, with the best tools providing incredibly precise speaker separation that saves a ton of manual tagging work.

Think of it this way: an API (Application Programming Interface) is a toolkit for developers. It lets them build transcription features directly into their own applications, websites, or internal systems. It requires coding to use.

An app, on the other hand, is a finished product for an end-user. It’s a program you can download and use right away—like a mobile voice recorder or a desktop transcription tool—with no technical setup required.

Ready to see what transcription that actually works feels like? Lemonfox.ai delivers world-class accuracy, dependable speaker identification, and a refreshingly simple API—all for under $0.17 per hour. Start your free trial today and get 30 hours on us.