Published 12/4/2025

So, what exactly is a voice AI platform? Think of it less like a single tool and more like a complete toolkit for building apps that can listen, understand, and talk back. It’s the essential bridge connecting human speech to your software, letting you craft experiences that feel natural and intuitive.

Instead of building everything from scratch, these platforms bundle all the core technologies—like speech recognition and voice synthesis—into easy-to-use APIs.

At its heart, a voice AI platform is an integrated system designed to process and interpret human speech. The best analogy is a workshop. A carpenter can’t build a table with just a saw; they need a hammer, a measuring tape, and sandpaper, too. In the same way, a developer needs a suite of specialized tools to create a voice-powered application, and these platforms put everything you need right at your fingertips.

This kind of technology is quickly moving from a "nice-to-have" novelty to a core part of modern software, and the market numbers tell the same story. The Voice AI Agents market is projected to grow from USD 2.4 billion to an estimated USD 47.5 billion over the next decade. More telling, integrated voice AI platforms already account for more than 76.4% of that market, which shows a strong preference for all-in-one solutions. You can dig into the specifics in this detailed market analysis from Market.us.

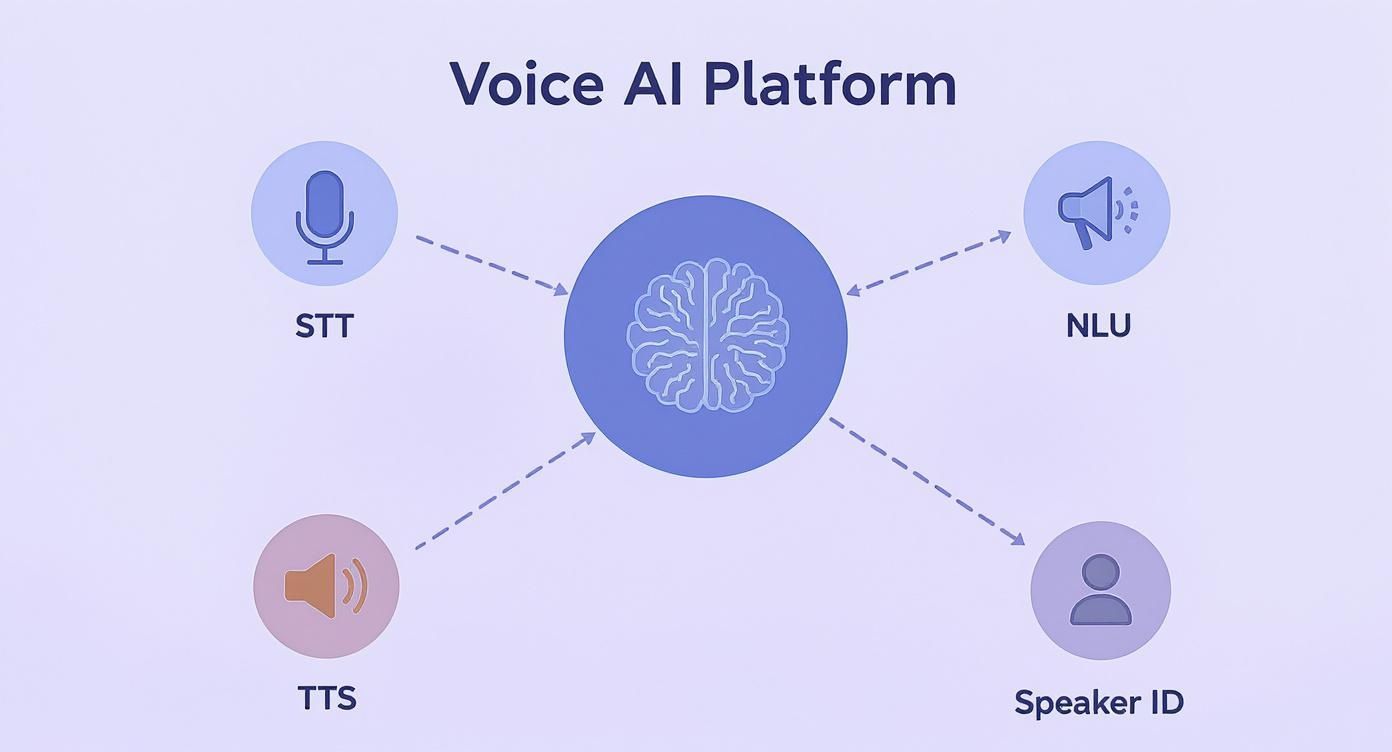

To really get what these platforms are all about, it helps to look under the hood at their four main components. Each piece has a specific, critical job to do in turning spoken words into digital action and back again.

It's helpful to think of these components as a team working together. Here's a quick breakdown of who does what.

Core Components of a Voice AI Platform

| Technology | Primary Function | Analogy |
|---|---|---|
| Speech-to-Text (STT) | Converts spoken audio into written text. | The Ears |
| Text-to-Speech (TTS) | Synthesizes written text into audible speech. | The Mouth |
| Natural Language Understanding (NLU) | Analyzes text to figure out intent and meaning. | The Brain |
| Speaker Recognition | Identifies or verifies a person by their voice. | The Vocal Fingerprint |

Together, these four pillars create a complete conversational loop. The platform can listen to what a user says, understand what they mean, trigger an action, and respond with a natural-sounding voice.

Key Takeaway: A voice AI platform isn’t just one thing; it's a collection of interconnected services working in concert. This tight integration is what makes it possible for developers to build sophisticated voice experiences without needing a Ph.D. in machine learning.

Platforms like Lemonfox.ai wrap these powerful capabilities into simple APIs, bringing advanced voice features within reach for any project. By offering STT, TTS, and speaker recognition, they give you the foundational elements needed to build truly dynamic, voice-driven interactions. This lets you focus on what really matters—creating an amazing user experience—instead of getting bogged down in building the AI infrastructure from the ground up.

To really get a handle on building great voice experiences, you need to look behind the curtain. A voice AI platform isn't just one single piece of magic. It's actually a system built on four distinct, but tightly connected, technologies that work in concert to make a conversation flow.

Think of it like a high-speed relay race. Each pillar has a very specific job and seamlessly passes the baton to the next. This perfectly coordinated hand-off is what makes talking to a machine feel so surprisingly natural.

This diagram shows how these key components fit together.

You can see that each technology—hearing, understanding, speaking, and identifying—handles one part of the job, but they all connect to a central hub to create the full experience.

The whole process kicks off with Speech-to-Text (STT), which you'll also hear called automatic speech recognition (ASR). Its only job is to listen to a stream of audio and turn those spoken words into written text. It’s essentially a super-fast, hyper-accurate court reporter for your application.

For example, when you ask, "Hey Google, what's the weather like in Berlin?" the STT engine is the first to jump into action. It captures the sound waves from your voice and transcribes them into the text string: "what's the weather like in Berlin". This text is the raw input for the next stage. Getting this part right is critical; even one wrong word can send the entire interaction off course.

Once the words are in text form, Natural Language Understanding (NLU) takes over. This is the real brain of the operation. NLU’s job isn’t just to read the words, but to figure out the intent behind them. It's the difference between knowing what was said and understanding what was meant.

So, the NLU engine takes the text "what's the weather like in Berlin" and breaks it down. It quickly identifies the core intent (get weather) and pulls out the key piece of information, or entity (location: Berlin). This creates structured data that your application can actually use. NLU is what turns a simple sentence into a clear command a machine can execute.
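To make the intent-and-entity idea concrete, here is a minimal sketch that uses regular expressions in place of a trained model. The intent names, the patterns, and the `parse_utterance` helper are all illustrative assumptions; production NLU engines rely on statistical models rather than hand-written rules.

```python
import re

# Toy patterns: each intent maps to a regex whose named groups are its entities.
# These names and patterns are assumptions for illustration, not a real NLU model.
INTENT_PATTERNS = {
    "get_weather": re.compile(r"weather (?:like )?in (?P<location>[A-Za-z ]+)", re.IGNORECASE),
    "set_alarm": re.compile(r"set an alarm for (?P<time>[\w: ]+)", re.IGNORECASE),
}


def parse_utterance(text: str) -> dict:
    """Return the first matching intent and its entities, or an 'unknown' intent."""
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.search(text)
        if match:
            entities = {name: value.strip() for name, value in match.groupdict().items()}
            return {"intent": intent, "entities": entities}
    return {"intent": "unknown", "entities": {}}


result = parse_utterance("what's the weather like in Berlin")
print(result)
```

The returned dictionary is the kind of structured data the paragraph above describes: one intent plus the entities needed to act on it.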

The Power of Intent: Without NLU, your app just sees a jumble of words. By identifying the user's goal, NLU lets your software trigger the right action—whether that's playing a song, setting an alarm, or fetching data from a database.
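One common way to go from an identified intent to an action is a simple lookup table of handler functions. The sketch below assumes the NLU output is a dictionary with `intent` and `entities` keys; the handler names and reply strings are hypothetical.

```python
def get_weather(entities: dict) -> str:
    # A real handler would call a weather service; this one returns a canned reply.
    return f"Looking up the weather in {entities['location']}."


def set_alarm(entities: dict) -> str:
    return f"Alarm set for {entities['time']}."


# Map each intent name to the function that fulfils it.
HANDLERS = {
    "get_weather": get_weather,
    "set_alarm": set_alarm,
}


def dispatch(parsed: dict) -> str:
    """Route structured NLU output to the matching handler, with a fallback reply."""
    handler = HANDLERS.get(parsed["intent"])
    if handler is None:
        return "Sorry, I didn't understand that."
    return handler(parsed["entities"])


reply = dispatch({"intent": "get_weather", "entities": {"location": "Berlin"}})
print(reply)
```

Keeping the routing in a dictionary means adding a new voice command is a matter of writing one function and registering it.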

After your application has processed the command and found the answer—for instance, "It's 18 degrees and sunny in Berlin"—it needs a way to talk back to the user. That’s where Text-to-Speech (TTS) comes in. A TTS engine, sometimes called a voice synthesizer, takes written text and turns it into natural-sounding, audible speech.

It helps to think of TTS as a talented voice actor who can switch between different languages, accents, and tones instantly. The best modern TTS systems are incredible, producing speech that’s nearly impossible to distinguish from a real person, complete with lifelike intonation. This is what makes the experience feel engaging, not clunky and robotic.

The final piece of the puzzle, Speaker Recognition, adds a powerful layer of security and personalization. This tech analyzes the unique qualities of a person's voice—things like pitch, tone, and cadence—to figure out exactly who is speaking. It works just like a vocal fingerprint.

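This comes in handy in two primary ways: identification (working out which known speaker is talking) and verification (confirming that a speaker is who they claim to be).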

A great example is a banking app that uses your voice to confirm it's you before giving you access to your account—a secure, hands-free way to log in. Platforms like Lemonfox.ai offer this feature right alongside STT and TTS, giving developers the tools to build far more sophisticated and secure voice applications.
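Voice verification is often framed as comparing two numeric "voiceprints" and accepting the speaker when they are similar enough. The sketch below assumes each voice has already been reduced to a small vector of numbers; the vectors, the `is_same_speaker` helper, and the 0.8 threshold are made up for illustration and are not taken from any particular platform.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # An all-zero vector carries no voice information.
    return dot / (norm_a * norm_b)


def is_same_speaker(enrolled: list[float], candidate: list[float], threshold: float = 0.8) -> bool:
    """Accept the candidate when its voiceprint is close enough to the enrolled one."""
    return cosine_similarity(enrolled, candidate) >= threshold


# Hypothetical voiceprints; real ones have hundreds of dimensions.
enrolled_voiceprint = [0.9, 0.1, 0.4]
same_person = [0.85, 0.15, 0.38]
different_person = [0.1, 0.9, 0.2]

print(is_same_speaker(enrolled_voiceprint, same_person))
print(is_same_speaker(enrolled_voiceprint, different_person))
```

The threshold is the main design choice: raising it reduces false accepts at the cost of rejecting more genuine users.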

It's one thing to talk about the tech behind voice AI, but where the rubber really meets the road is in how it’s solving real-world problems. This isn't just futuristic stuff anymore; it's actively reshaping how businesses work, cutting down on grunt work, and making life easier for both customers and employees.

From the doctor's office to your bank's call center, voice AI is stepping in to handle repetitive tasks, secure sensitive data, and offer support on a massive scale. The momentum is huge. The global conversational AI market, which is built on these core voice technologies, was valued at USD 12.24 billion and is expected to hit USD 61.69 billion by 2032, growing at an annual rate of 22.6%. That kind of growth tells you this is a major shift, not a passing trend. You can dig into more of the market data on Fortune Business Insights.

Let’s look at a few powerful examples of where voice AI platforms are making a real difference right now.

Ask any doctor what one of their biggest headaches is, and they’ll probably say "paperwork." Clinicians are drowning in administrative tasks, spending hours typing up patient notes. It’s a fast track to burnout and pulls them away from actual patient care.

Voice AI is a direct solution to this. By plugging high-accuracy Speech-to-Text (STT) into electronic health record (EHR) systems, doctors can simply dictate their notes as they go, either during an appointment or right after. The platform instantly transcribes their words, even nailing complex medical terms.

The impact is immediate and measurable:

We've all been there: stuck on hold, waiting to ask a simple question. Traditional call centers can be slow and frustrating. Voice AI platforms are completely changing this game with intelligent voice bots that can handle a flood of customer calls instantly.

These aren't the clunky, robotic phone trees of the past. Using Natural Language Understanding (NLU), modern voice bots figure out what a caller actually wants. They can check an order status, process a payment, or answer product questions 24/7, often without needing a human at all. And if things get too complex, the bot hands the call off to the right agent with the full conversation history, so the customer doesn't have to repeat themselves.

A Win for Efficiency: Automating routine calls slashes wait times and frees up human agents to tackle the really tricky issues. It's a double win: customers are happier, and the call center runs more smoothly and cost-effectively.

In finance, security is everything. But passwords and PINs are notoriously easy to steal. This is where Speaker Recognition comes in, offering a much more robust, next-gen solution.

Many banks are now using voice biometrics to verify who you are when you call. The system listens to your unique vocal patterns—your "vocal fingerprint"—to confirm your identity in seconds. It's way faster and a whole lot safer than grilling you with a list of security questions.

This approach delivers two key benefits:

These are just a few examples. As voice AI platforms like Lemonfox.ai continue to make these powerful tools more affordable and easier to use, developers are dreaming up new ways to weave voice into pretty much every application you can imagine.

https://www.youtube.com/embed/lvS-im-KgRU

With so many voice AI providers out there, picking the right one can feel overwhelming. But this decision is critical—it directly shapes your app's performance, how it scales, and ultimately, how users feel about it. The best way to cut through the noise is to have a clear, structured way to evaluate your options.

Think of it like choosing an engine for a car you're building. You wouldn't put a massive, gas-guzzling racing engine in a small city car, right? The goal is to find the perfect fit. The best voice AI platform is the one that lines up perfectly with your project's needs for performance, budget, and global reach.

Let's break this down into five key areas. By looking closely at each one, you can pick a partner that truly supports your technical needs and business goals.

First things first: how well does the AI actually understand what people are saying? Accuracy is the absolute bedrock of any voice-powered feature. It's often measured by a metric called Word Error Rate (WER), but the real test is in how it performs in the wild.

If your speech-to-text engine consistently gets things wrong, the whole user experience crumbles. People get frustrated, and they leave.

A single accuracy percentage on a pricing page doesn't tell you the full story. Performance can change dramatically depending on background noise, different accents, or specialized industry jargon.

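You should be asking vendors questions like: How does the model perform with noisy audio, accents, and industry-specific terms? Can it be fine-tuned?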

Latency is all about speed—it’s the time it takes for the platform to process audio and send a result back. For any real-time conversation, like a support bot or a voice assistant, low latency is everything. Even a tiny delay can make an interaction feel clunky and unnatural.

Imagine talking to someone who always takes a few seconds to reply. The conversation would feel awkward and stilted. Your app is no different. You're aiming for an interaction that feels as smooth and immediate as a real human conversation.

The Real-Time Test: For applications that need a natural back-and-forth dialogue, aim for a latency of under 300 milliseconds. Beyond roughly that point, delays become noticeable and start to disrupt the flow.
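A quick way to check a platform against that target is to time each call yourself. In this sketch, `fake_transcribe` is a stand-in that simply sleeps for a moment; in a real test you would swap in the actual request to the service you are evaluating.

```python
import time

LATENCY_BUDGET_MS = 300  # Target taken from the guideline above.


def fake_transcribe(audio_chunk: bytes) -> str:
    """Stand-in for a real API call; sleeps briefly to simulate processing."""
    time.sleep(0.01)
    return "hello world"


def timed_call(func, *args):
    """Run func(*args) and return its result with the elapsed time in milliseconds."""
    start = time.perf_counter()
    result = func(*args)
    elapsed_ms = (time.perf_counter() - start) * 1000
    return result, elapsed_ms


text, elapsed_ms = timed_call(fake_transcribe, b"\x00" * 3200)
within_budget = elapsed_ms < LATENCY_BUDGET_MS
print(f"{elapsed_ms:.1f} ms, within budget: {within_budget}")
```

A single measurement says little, so it is worth repeating the call many times and looking at the slowest results as well as the average.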

Your app’s potential market is directly tied to the languages your voice AI platform can handle. If you want to reach a global audience, you need a partner with solid language coverage for both Speech-to-Text (STT) and Text-to-Speech (TTS).

But don't just count the flags on their website. Dig a little deeper. Some platforms might claim to support a hundred languages, but only offer advanced features like speaker recognition or punctuation for a handful of them. This is where a platform like Lemonfox.ai really shines, offering robust, full-featured support for over 100 languages so you can build for a diverse, international user base from day one.

Voice AI pricing can be confusing. You’ll see everything from per-minute billing to complex tiered subscriptions. A low sticker price can sometimes hide extra fees for essential features or high overage charges that sneak up on you as your usage grows.

The goal is to find a pricing model that's both affordable and predictable. Look for clear, usage-based pricing that scales with your growth without any nasty financial surprises. Solutions like Lemonfox.ai are built to be incredibly cost-effective, offering high-quality transcription for less than $0.17 per hour. This makes powerful voice AI accessible for everyone, from startups to enterprise teams.
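With flat usage-based pricing, a budget estimate is simple arithmetic. The sketch below uses the $0.17 per hour figure quoted above as an upper bound; the `estimate_monthly_cost` helper and the example volume of 500 minutes a day are assumptions for illustration.

```python
PRICE_PER_HOUR_USD = 0.17  # Upper bound quoted in the text ("less than $0.17 per hour").


def estimate_monthly_cost(
    minutes_per_day: float,
    days: int = 30,
    price_per_hour: float = PRICE_PER_HOUR_USD,
) -> float:
    """Estimate transcription spend for a month of steady daily usage."""
    hours = minutes_per_day * days / 60
    return round(hours * price_per_hour, 2)


# Example: 500 minutes of audio per day is 250 hours over a 30-day month.
cost = estimate_monthly_cost(minutes_per_day=500)
print(f"Estimated monthly cost: ${cost:.2f}")
```

Running the same calculation with each vendor's rate and your own expected volume makes pricing pages much easier to compare.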

Finally, you absolutely have to know where your users' data is going and how it's being handled. This isn't just a "nice-to-have"—it's a legal necessity, especially if you have users in places with strict data laws like the EU's General Data Protection Regulation (GDPR).

Voice data is sensitive, so you need a platform that takes security and privacy seriously. Find out about their data retention policies. Do they store your audio, and if so, for how long and why? A huge plus is working with a provider that deletes your data right after processing. Even better, having the option to use an EU-based API, as offered by Lemonfox.ai, is a massive advantage for ensuring GDPR compliance and earning the trust of your European customers.

Choosing a provider is a big decision, but it doesn't have to be a shot in the dark. This checklist is designed to help you methodically compare your options and find the perfect match for your project's specific needs.

| Evaluation Criterion | Key Questions to Ask | Why It Matters |
|---|---|---|
| Accuracy (WER) | How does the model perform with noisy audio, accents, and industry-specific terms? Can it be fine-tuned? | High accuracy is essential for a good user experience. If the AI can't understand the user, the application fails. |
| Latency | What is the average end-to-end response time? Is it under 300ms for real-time use cases? | Low latency makes conversations feel natural and responsive. High latency creates frustrating, awkward interactions. |
| Language Support | How many languages are supported for STT and TTS? Are advanced features (e.g., speaker recognition) available for all of them? | Your choice determines your global reach. Ensure the platform supports the languages and features your target markets need. |
| Cost & Pricing | Is the pricing model transparent and predictable? Are there hidden fees for features or overages? | A clear, scalable pricing model prevents budget surprises and ensures the solution is sustainable as you grow. |
| Data Privacy & Region | Where is data processed and stored? Do you offer regional endpoints (e.g., EU)? What is the data retention policy? | This is critical for legal compliance (like GDPR) and building user trust, especially when handling sensitive voice data. |

By walking through these questions, you can move beyond marketing claims and evaluate platforms based on what truly matters for building a successful, reliable, and secure voice-powered application.

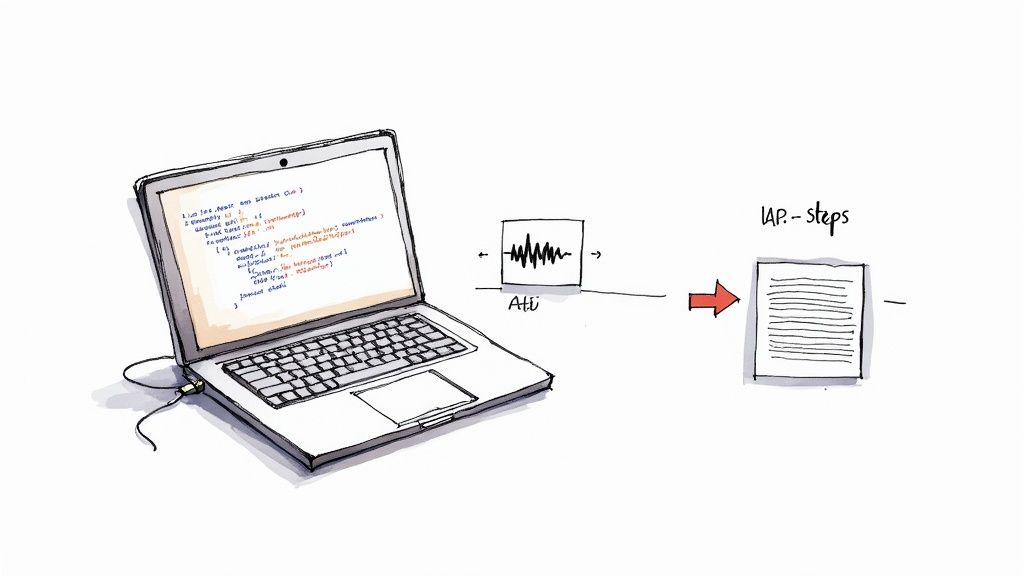

Alright, let's move from theory to practice. You'd be surprised how straightforward it is to plug a voice AI platform into your project, especially with the developer-friendly APIs available today. We'll walk through the whole workflow right now, using Lemonfox.ai as our example to show you how fast you can get a proof-of-concept off the ground.

The goal here isn't to get lost in the weeds. It's to show you the direct path: securing your API key, installing the right tools, and writing a tiny bit of code to transcribe your first audio file. Seriously, you can go from zero to a working transcription in just a few minutes.

This is what it looks like in practice—a simple, clean process to turn raw audio into structured data your app can actually use.

As you can see, we're talking about just a few lines of code to get things rolling. That's the beauty of modern voice AI platforms.

First things first: you need an API key. Think of it as a secure password that proves your application is allowed to talk to the voice AI service. It authenticates every request you make and makes sure no one else is using your account.

Getting one from Lemonfox.ai is dead simple:

A Quick Security Tip: Treat your API key like you would any password. Never, ever hardcode it into your front-end code (like a public website's JavaScript). The best practice is to store it as an environment variable on your server. This keeps it out of sight and prevents anyone from snatching it.
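Here is a minimal sketch of that environment-variable approach. The variable name `LEMONFOX_API_KEY` and the `load_api_key` helper are assumptions chosen for this example; use whatever name fits your deployment.

```python
import os


def load_api_key(env_var: str = "LEMONFOX_API_KEY") -> str:
    """Read the API key from the environment and fail loudly if it is missing."""
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"Set the {env_var} environment variable before running.")
    return api_key
```

On your server you would set the variable once (for example, in the service's configuration) and call `load_api_key()` at startup, so the key never appears in source control or in code shipped to the browser.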

Next up is the Software Development Kit (SDK). This is a huge time-saver. An SDK is basically a pre-built library that handles all the messy, low-level stuff involved in connecting to an API. Instead of writing that boilerplate yourself, you get to use simple, clean functions in your favorite programming language.

If you're working in a Python project, installing the Lemonfox.ai SDK is just a single command in your terminal:

    pip install lemonfox

For the JavaScript folks using Node.js, it's just as easy:

    npm install lemonfox

And that's it. Your development environment is now fully equipped to start making calls to the API.

Now for the fun part. With your API key secured and the SDK installed, you can write the code to transcribe an audio file.

Let's look at a basic Python example. This little snippet will initialize the client with your key and then transcribe a local audio file named meeting_audio.mp3.

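    from lemonfox import Lemonfox

    # Replace the placeholder with your own key (ideally loaded from an environment variable)
    client = Lemonfox(api_key="YOUR_API_KEY")

    # Open the audio in binary read mode so the raw bytes are sent as-is
    with open("meeting_audio.mp3", "rb") as audio_file:
        # Call the create method and just pass in the file
        transcription = client.speech.transcriptions.create(
            file=audio_file,
        )

    print(transcription.text)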

See how clean that is? You just create a client object, open your file in binary read mode ("rb"), and call the create method. The platform does all the heavy lifting in the background, and what you get back is the clean, transcribed text.

This really shows that adding powerful voice features to your application isn't some monumental engineering challenge anymore. It's something any developer can tackle in an afternoon.

Picking the right voice AI platform isn't just a technical choice—it's a strategic one that shapes your app's performance, your budget, and how fast you can scale. We've walked through the core tech and what to look for, so let's tie it all together and see where Lemonfox.ai fits in.

The market for voice AI is exploding. We're talking about a global market projected to hit around USD 10.05 billion, with a single banking firm spending USD 5 million on multilingual voice bots. This growth, highlighted in enterprise spending trends from Gnani.ai, shows just how critical it is to have powerful and accessible tools.

Let's be honest: many of the big-name voice AI platforms come with price tags that can make a startup founder's eyes water. We built Lemonfox.ai specifically to bridge that gap, giving developers access to top-tier performance without the financial headache.

Our pricing is straightforward and built to grow with you. You can transcribe audio for less than $0.17 per hour. That kind of affordability means you can bake high-quality STT and TTS into your project from the start without sweating over a massive bill at the end of the month.

The goal is simple: make premium voice technology available to everyone. By blending low cost with high accuracy, we make sure you never have to sacrifice quality for the sake of your budget.

Modern applications need to speak everyone's language. That's why we offer robust support for over 100 languages in both our Speech-to-Text and Text-to-Speech APIs. You get the tools to build for a worldwide audience from day one.

We also bundled in advanced features like speaker recognition as a standard part of our service. This lets you build more sophisticated applications—think personalized user experiences or even vocal biometric security—without having to stitch together multiple services or pay extra fees.

And for anyone building for the European market, our optional EU-based data processing makes GDPR compliance a non-issue. Plus, we're serious about privacy; we delete all your data immediately after it’s processed, giving you and your users total peace of mind.

Ready to see what you can build? Give a powerful, affordable, and privacy-focused voice AI a try. Start your free trial with Lemonfox.ai and get 30 hours of transcription on us.

As you start digging into voice AI, a few practical questions almost always pop up. It's one thing to understand the concepts, but it's another thing entirely to start plugging an API into your own application. It can feel like a big leap, but it doesn't have to be.

Let's walk through some of the most common questions developers have about accuracy, scalability, and what it really takes to get started. Think of this as the practical FAQ for building with voice.

You'll see a lot of platforms bragging about their Word Error Rate (WER). In simple terms, WER just counts the mistakes—words added, missed, or swapped—and gives you a percentage. A lower WER is obviously better, but that single number can be misleading.

Here's the reality: context is everything. An STT model that scores perfectly on pristine, studio-quality audio might completely fall apart in a noisy call center or on a choppy phone call. This is why you absolutely have to test any platform with audio that looks and sounds like your real-world use case. The best platforms offer models specifically tuned for different environments, like telephony, because they know that’s where performance truly matters.
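To run that kind of test yourself, you can compute WER from a trusted reference transcript and the platform's output. The sketch below uses the standard definition (substitutions, deletions, and insertions divided by the number of reference words) with a word-level edit distance; the sample sentences are invented, and real evaluations usually normalize punctuation and numbers first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)


reference = "what's the weather like in berlin"
clean_output = "what's the weather like in berlin"
noisy_output = "what the weather like in berlin today"

print(f"clean audio WER: {word_error_rate(reference, clean_output):.2f}")
print(f"noisy audio WER: {word_error_rate(reference, noisy_output):.2f}")
```

Scoring the same reference against transcripts of clean and noisy recordings shows how much a platform's accuracy depends on audio conditions.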

Honestly, getting started is often easier than most developers think. But there are two classic bumps in the road. The first is dealing with real-time audio streaming. Transcribing a live conversation means you’re juggling audio chunks and wrestling with latency, which is a different beast than just uploading a static file.
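The "juggling audio chunks" part usually starts with slicing the incoming audio into small, fixed-duration frames before sending them. This sketch assumes 16 kHz, 16-bit mono PCM and 100 ms chunks; those values and the `iter_audio_chunks` helper are illustrative, so check what your platform's streaming endpoint expects.

```python
from typing import Iterator

SAMPLE_RATE_HZ = 16_000  # Assumed sample rate.
BYTES_PER_SAMPLE = 2     # 16-bit mono PCM.
CHUNK_MS = 100           # Assumed chunk duration.


def iter_audio_chunks(pcm: bytes, chunk_ms: int = CHUNK_MS) -> Iterator[bytes]:
    """Yield consecutive fixed-duration slices of raw PCM audio."""
    chunk_bytes = SAMPLE_RATE_HZ * BYTES_PER_SAMPLE * chunk_ms // 1000
    for start in range(0, len(pcm), chunk_bytes):
        yield pcm[start:start + chunk_bytes]


# One second of silence stands in for microphone input.
one_second_of_silence = bytes(SAMPLE_RATE_HZ * BYTES_PER_SAMPLE)
chunks = list(iter_audio_chunks(one_second_of_silence))
print(len(chunks), len(chunks[0]))
```

Smaller chunks lower the delay before the first partial result but increase the number of messages you send, so chunk size is a trade-off between latency and overhead.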

The second common headache is cost management. Pricing models can be confusing, and an unexpected surge in usage can leave you with a shocking bill at the end of the month.

A predictable, usage-based pricing model is a developer’s best friend. It takes away the financial guesswork and lets you scale your app without worrying that your success is going to sink your budget.

Yes, and this is a game-changer. Off-the-shelf voice AI models are trained on huge, general datasets. They're powerful, but they probably don't know your company's product names, industry-specific jargon, or the unique acronyms your team uses.

That's where customization comes in, whether through full fine-tuning or a simpler custom vocabulary. Top-tier platforms let you feed the model a list of your specific terms so it learns to recognize them, which can drastically boost accuracy for your particular domain. For any serious application in fields like medicine, finance, or tech where getting it right is non-negotiable, this kind of customization is essential.
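If a platform does not expose a vocabulary feature, a rough client-side approximation is to snap near-miss words in the transcript onto your own term list. To be clear, this is a post-processing workaround rather than model customization; the `apply_custom_vocabulary` helper, the 0.8 cutoff, and the sample terms are assumptions for illustration.

```python
import difflib


def apply_custom_vocabulary(transcript: str, vocabulary: list[str], cutoff: float = 0.8) -> str:
    """Replace words that closely resemble a known domain term with that term."""
    lookup = {term.lower(): term for term in vocabulary}
    corrected = []
    for word in transcript.split():
        # get_close_matches returns the best candidates scoring at or above the cutoff.
        matches = difflib.get_close_matches(word.lower(), list(lookup), n=1, cutoff=cutoff)
        corrected.append(lookup[matches[0]] if matches else word)
    return " ".join(corrected)


fixed = apply_custom_vocabulary(
    "patient takes metformine daily",
    ["Metformin", "Lisinopril"],
)
print(fixed)
```

This simple version splits on whitespace, so words with attached punctuation may not match; a stricter cutoff reduces the risk of rewriting ordinary words that happen to look like a domain term.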

Ready to build with a platform that gets these challenges? Lemonfox.ai offers high-accuracy models built for real-world audio, straightforward pricing, and the tools you need to succeed. Start your free trial today and get 30 hours of transcription on us.