First month for free!

Get started

Published 10/24/2025

Voice recognition isn't just about turning spoken words into text. It's about teaching machines to listen and understand, turning our speech into direct actions. Think about the last time you asked a smart speaker for the weather or dictated a text message while driving—that's this technology in action, quietly working in the background of our lives.

What was once science fiction has quickly become a normal part of our routine. Thanks to huge strides in AI, deep learning, and natural language processing, talking to our devices feels less like a gimmick and more like a conversation.

We see it everywhere:

It's simple, really. Speaking is almost always faster and easier than typing, which is why people have embraced it so enthusiastically. This has created a huge opportunity for developers and businesses to build more intuitive and accessible experiences.

The market numbers back this up. The global speech and voice recognition market hit USD 8.49 billion in 2024 and is expected to soar to USD 23.11 billion by 2030. That’s a staggering 19.1% compound annual growth rate, as detailed in the latest market report on MarketsandMarkets.

When you make voice the interface, you're not just adding a feature; you're fundamentally changing how users interact with your product, boosting engagement and cutting down on manual effort.

So, what's behind this explosion? A few key things have fallen into place.

First, accuracy has gotten remarkably good. Modern neural networks can now understand speech with incredible precision, even in noisy environments. This reliability is what makes it possible for developers to build dependable transcription apps or virtual assistants that actually work.

Second, latency is no longer an issue. The delay between speaking a command and seeing a result has shrunk from several seconds to mere milliseconds. For things like real-time captions or in-car controls, that near-instant response is absolutely critical.

And finally, privacy is being taken seriously. As people grow more conscious of their data, providers have had to step up. For instance, platforms like Lemonfox.ai are built with a privacy-first approach, deleting user data immediately after processing. This helps build trust, especially for sensitive enterprise and consumer applications.

From retail checkouts to the dashboard of your car, voice recognition is making hands-free control the new standard.

Whether you're a developer building the next great voice-powered app or a business leader looking for an edge, understanding this technology is no longer optional. It's a powerful channel for connecting with your users in a more natural way.

This guide will walk you through exactly how it all works, how you can integrate it using a service like Lemonfox.ai, and what you need to consider around accuracy, latency, and privacy.

Let's dive in.

Ever wonder what really happens when you talk to your smart speaker or dictate a text message? It feels instant, almost like magic. But behind the curtain is a fascinating process that takes the physical vibrations of your voice and translates them into data a computer can understand.

The best way to think about it is to imagine a highly trained human translator. This translator doesn't just know two languages; they're fluent in both the language of sound waves and the digital language of machines.

It all starts with the microphone—the system’s "ears." When you speak, you create sound waves, which are just vibrations traveling through the air. These are analog signals, continuous and messy. Computers, however, only speak in ones and zeros, the clean, precise language of digital information.

That’s where the first step of the translation comes in. A tiny piece of hardware called an Analog-to-Digital Converter (ADC) samples your analog voice signal thousands of times per second, creating a digital snapshot. This digital representation is what the software can finally start to work with.

The journey from a sci-fi concept to a practical business tool has been a long one, but its impact is undeniable today.

This evolution highlights just how deeply integrated this technology has become, giving many businesses a real competitive edge.

To really get a grip on the process, it helps to break it down into four key stages. Each step builds on the last, turning raw sound into meaningful text.

| Stage | Description | Analogy (Human Translator) |

|---|---|---|

| 1. Signal Processing | The system cleans up the raw digital audio, removing background noise and isolating the user's voice. | Listening carefully in a loud room to focus only on what one person is saying. |

| 2. Acoustic Modeling | The cleaned audio is broken down into the smallest units of sound, called phonemes. | Identifying the individual sounds within a word, like "c-a-t," before knowing the word itself. |

| 3. Language Modeling | The sequence of phonemes is analyzed to determine the most likely words and sentences they form based on grammar and context. | Assembling the sounds into words that make sense together, like realizing "I scream" fits better than "ice cream" in a sentence about being scared. |

| 4. Text Output | The most probable sentence is chosen and presented to the user as the final text transcription. | Speaking or writing down the fully translated and grammatically correct sentence. |

Each of these stages is critical. Without a clean signal, the models struggle. Without accurate phoneme detection, the words are gibberish. And without context, the final sentence might be grammatically correct but completely wrong.

Let's dig a little deeper into the two most important modeling stages.

Once the audio is digitized and cleaned up, the acoustic model gets to work. Think of this model as a master phonetician. Its only job is to listen to the digital signal and break it down into its fundamental sounds, or phonemes.

For instance, the word "speak" is made up of four phonemes: "s," "p," "ee," and "k." The acoustic model has been trained on thousands of hours of spoken language, so it can recognize these sounds with impressive accuracy, no matter who is speaking—fast, slow, high-pitched, or with a heavy accent. It maps the audio to a sequence of probable phonemes, which it then hands off to the next part of the system.

At its core, the acoustic model isn't trying to understand words just yet. It's focused entirely on mapping the raw audio data to the basic sounds of a language, creating the first layer of interpretation.

With a string of phonemes ready, the language model takes center stage. If the acoustic model is the phonetician, the language model is the seasoned editor and grammar expert. It knows how language is supposed to work.

Its task is to look at the stream of phonemes and figure out the most probable words and sentences they could form. It doesn't just guess one word at a time; it considers the whole context.

Here’s a quick look at its thought process:

This elegant two-part system—breaking sound into phonemes and then building those phonemes into meaningful sentences—is the engine that drives all modern voice recognition. It's how a platform like Lemonfox.ai can turn messy human speech into structured, accurate data for developers and businesses.

The slick, seamless voice recognition we take for granted today didn't just pop into existence. Its story started way back in the mid-20th century, with clunky experiments that could barely understand a single person. Looking back reveals a long, slow climb from a niche curiosity to a tool that’s now part of our daily lives.

The first real attempt came out of Bell Laboratories in the 1950s. They built a system called "Audrey," a massive, power-guzzling machine that could recognize spoken digits from zero to nine. The catch? It really only worked for the voice of the guy who built it. It was a fascinating proof-of-concept, but a long way from being useful to anyone else.

For decades after Audrey, progress was slow. Early systems were painfully limited, often forcing you to pause... awkwardly... between... every... single... word. The real breakthrough didn't happen until the 1970s and 80s when researchers started applying statistical models, most importantly the Hidden Markov Models (HMMs).

Instead of trying to match sounds to a perfect, pre-recorded template, HMMs changed the game entirely. They allowed systems to calculate the probability that a sequence of sounds meant a particular word. This was huge. It meant the technology could finally start to handle the natural variations in how people actually talk—differences in pitch, speed, and accent.

This new statistical approach was the key that unlocked everything else.

The move to statistical models like HMMs was the turning point. It taught machines to think in terms of likelihood rather than absolute matches, which is a lot closer to how our own brains process language.

It was during this time that the market started to take notice. The global voice and speech recognition software market, valued at USD 10.46 billion back in 2018, was already on a trajectory to hit USD 31.8 billion by 2025. As this Grand View Research analysis shows, this growth marks the moment the tech went from a lab project to a serious business tool.

The most recent—and most dramatic—leap forward has been fueled by machine learning and deep neural networks. When these advanced AI models arrived on the scene around the 2010s, they quickly began to blow past the performance of the older HMMs.

Neural networks are loosely inspired by the structure of the human brain. They can chew through immense amounts of data—we’re talking thousands upon thousands of hours of spoken audio—to learn the incredibly subtle patterns and quirks of human speech all on their own. This is the magic behind the stunning accuracy of today's voice assistants like Siri, Alexa, and Google Assistant.

This journey, from a simple digit recognizer like Audrey to the sophisticated AI systems we have now, is what makes it possible for a platform like Lemonfox.ai to deliver incredibly accurate transcription in real-time. The technology has come an awfully long way, and it’s not slowing down anytime soon.

Voice recognition has come a long way from just asking a smart speaker for the weather forecast. It has quietly become part of our daily lives, solving real problems and making things work better, often in ways we don't even think about.

This isn't just about convenience anymore. It’s a serious tool driving real change in how businesses operate. Turning our spoken words into data that a computer can understand has opened the door to all sorts of automation and accessibility improvements.

You see it all the time in customer service. Instead of getting stuck in those frustrating "press 1 for sales, press 2 for support" phone menus, many companies now use voice-activated systems. You just say what you need, and the system gets you to the right person. It's a small change that saves everyone a lot of time and hassle.

Think about your car. Voice recognition is a huge safety feature. Being able to change a song, answer a call, or pull up directions without taking your hands off the wheel is a game-changer for reducing distractions on the road.

It’s making a big difference at work, too. For journalists, researchers, or anyone who sits through a lot of meetings, real-time transcription is a lifesaver. Instead of trying to scribble down every word, you can actually focus on the conversation, knowing you’ll have a perfect, searchable transcript later.

At its core, voice recognition is about reducing friction. It lets us interact with technology in a more natural, human way, which makes complicated tasks simpler and safer.

This rapid adoption is all thanks to huge leaps in artificial intelligence. The market for AI voice recognition was already valued at around USD 6.48 billion in 2024, and it's expected to rocket to USD 44.7 billion by 2034. That kind of growth shows just how deeply it's being integrated into major industries like healthcare and electronics. You can explore the full AI voice recognition market report to see just how fast this space is moving.

Nowhere is the impact more profound than in healthcare. Doctors and nurses are swamped with paperwork, spending a huge chunk of their day typing up notes and updating electronic health records (EHR).

Voice recognition technology gives them a way out. They can now dictate patient notes directly into the system, which not only saves an incredible amount of time but also leads to more accurate and detailed records. This frees them up to spend more time on what actually matters: taking care of patients.

This kind of seamless interaction is what we've all become used to with personal assistants on our phones.

These assistants are the friendly face of the powerful voice technology working behind the scenes in so many different areas.

And we're really just scratching the surface. As the technology gets better at understanding context and nuance, you’ll see it pop up in even more places. Think smart homes that know what you need before you ask, or educational tools that adapt to how you learn. Companies like Lemonfox.ai are putting these powerful tools into the hands of more developers, so expect to see a lot more innovation ahead.

Let's be honest: not all voice recognition technology is built the same. If you're looking to add speech-to-text into your product, picking the right system is one of the most important decisions you'll make. It directly shapes your user experience and how well your operations run. But with a sea of options out there, how do you actually know which one is right for you?

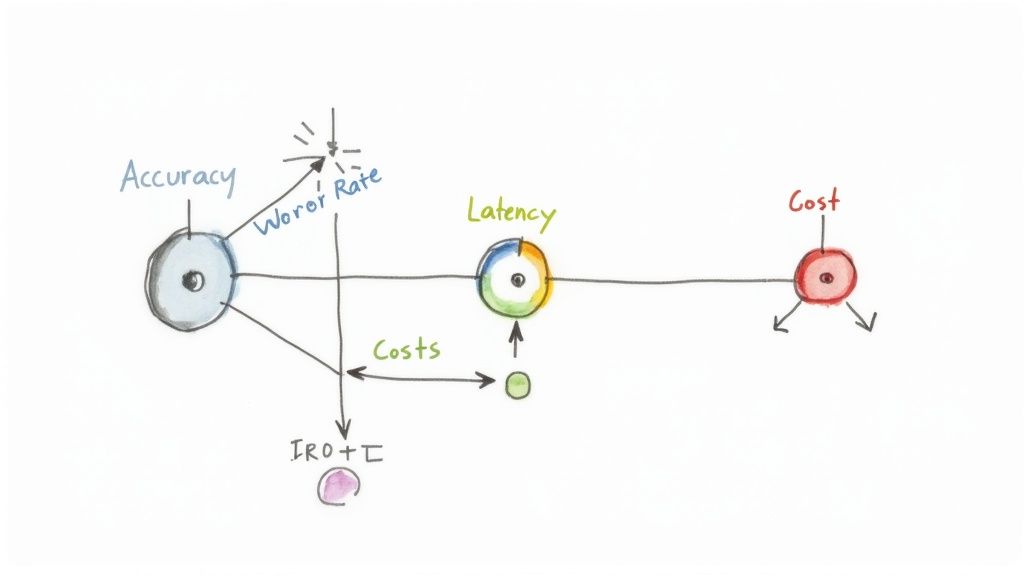

The key is to understand the delicate balance between three core performance metrics. Think of them as the three legs of a stool—if one is off, the whole thing wobbles. Nailing that balance is what separates a frustrating user experience from a seamless one.

These metrics—accuracy, latency, and cost—are always in a push-and-pull relationship. Tuning one up often means compromising on another. Getting a good handle on each one will give you the confidence to choose a service that truly fits your project's needs.

Accuracy is usually the first thing that comes to mind, and for good reason. If the system can't figure out what's being said, nothing else really matters. In the speech-to-text world, we typically measure accuracy by looking at its opposite: the Word Error Rate (WER).

WER is just a simple percentage of the words the system got wrong. A lower WER means a more accurate transcript. For instance, a system with a 5% WER is getting 95 out of every 100 words right. The best systems today can hit a WER below 5% under perfect conditions, but real-world factors can throw a wrench in the works:

While chasing a 0% WER is tempting, "good enough" accuracy really depends on the job. A minor mistake in a quick voice note is no big deal, but that same error in a medical transcription could have serious consequences.

Latency is all about speed. It’s the time it takes for the system to hear your speech, process it, and spit out the text. You see it as the delay between when you stop talking and when the words pop up on your screen. For some applications, this is just as critical as accuracy.

Imagine you're providing real-time captions for a live broadcast. If the latency is high, the captions will trail so far behind the speaker that they become completely useless. The same goes for any interactive voice command system—it needs a snappy, near-instant response to feel natural.

On the other hand, if you're just transcribing a recorded meeting to read later, latency is barely a concern. Who cares if it takes a few minutes to process the file, as long as the final transcript is spot-on? This is that classic trade-off in action: systems built for ultra-low latency often have to cut a few corners on accuracy to deliver results that fast.

To make a well-rounded decision, it's helpful to see these core metrics side-by-side. Each one tells a different part of the story about a system's performance. The table below breaks down what you need to know.

| Metric | What It Measures | Why It's Important |

|---|---|---|

| Accuracy (WER) | The percentage of words incorrectly transcribed. | Directly impacts the usability and reliability of the output. Crucial for critical applications. |

| Latency | The delay between speech input and transcription output. | Essential for real-time interactions like voice assistants or live captioning. |

| Cost | The price per unit of audio processed (e.g., per minute). | Determines the financial viability of a project, especially at scale. |

Ultimately, the "best" service isn't the one with the highest score on a single metric, but the one that offers the right combination for your specific use case and budget.

Finally, we have to talk about the bottom line: cost. Most voice recognition services charge based on the volume of audio you process, usually billed by the minute or hour. And prices can be all over the map, depending on the provider and the model's capabilities.

Naturally, the more advanced models that promise top-tier accuracy or handle really messy audio often come with a premium price tag. This brings up another critical question you have to answer: Is that tiny bump in accuracy from a big-name provider really worth the massive jump in cost for what you’re building?

For a lot of startups and indie developers, finding an affordable solution that doesn't skimp on quality is everything. That’s where services like Lemonfox.ai come in. They’re built to strike that balance, delivering excellent transcription quality at a cost that makes powerful voice tech accessible to more people.

As voice recognition becomes a bigger part of our daily routines, it inevitably raises some tough questions about privacy and ethics. The convenience of talking to our devices is fantastic, but it comes with a serious responsibility to handle our data with care and create systems that work for everyone, not just a select few.

Let's start with the biggest concern most people have: the idea of a device that's "always listening." It's a creepy thought, right? The fear that a private conversation could be recorded and sent off to some server without you ever knowing is completely valid. This is exactly why the wake word is so important.

Your phone or smart speaker isn't recording everything you say 24/7. Instead, it's designed to listen locally for one specific phrase, like "Hey Siri" or "Alexa." Think of it as dozing until it hears its name. Only after it catches that wake word does it "wake up" and start sending audio to the cloud to figure out what you want. It’s a design choice made specifically to address this very privacy issue.

Beyond just privacy, we have to talk about algorithmic bias. A voice recognition model is a direct reflection of the data it was trained on. So, if the training data is mostly from one group—say, native English speakers from a specific region—the AI will get really good at understanding them, but struggle with everyone else.

This creates a frustrating digital divide. The technology becomes less accurate and less useful for people with different accents, non-native speakers, or those with unique speech patterns. This can be a minor annoyance when your smart speaker mishears a song title, but it becomes a major problem when people can't access essential services that use voice commands for support or navigation.

Addressing bias isn't just about tweaking code; it's an ethical must. For voice recognition to be a truly helpful tool for humanity, it has to understand the rich diversity of human voices.

Thankfully, the industry is taking these challenges seriously. The only way forward is by earning user trust, which really boils down to two things: transparency and control.

Companies are getting better at being upfront about how they use voice data. Many now offer dashboards where you can see—and delete—your own voice recordings. This is a huge step, as it puts you back in the driver's seat of your own data.

Here are a few of the key strategies being put into practice:

By tackling these privacy and ethical issues head-on, we can build voice technology that people feel good about using. The ultimate goal isn't just to create powerful systems, but to create ones that are fair, inclusive, and respectful.

As you start working with voice recognition, you're bound to have some questions. Getting a handle on how these systems work, what they can do, and how they manage your data is the first step to using them effectively. Let's tackle some of the most common ones.

This is usually the first thing people ask, and it’s a great question. Modern systems have gotten incredibly good, often hitting over 95% accuracy under the right conditions. Think of a clean audio file, a quality microphone, and little to no background noise.

Of course, the real world is messy. A few things can throw a wrench in the works:

The good news is that ongoing improvements in AI and machine learning are constantly pushing that accuracy number up, making the tech more dependable in all sorts of environments.

The thought of an "always-on" microphone is a valid privacy concern, but it's mostly based on a misunderstanding of how these devices work. Your smart speaker isn't secretly recording every conversation you have.

Instead, they rely on a wake word—a specific trigger phrase like "Hey Siri" or "Alexa." The device is always listening for that one specific phrase using on-device processing, but it isn't sending any audio to the cloud. Only when it hears that wake word does it "wake up" and start streaming your request for processing. This is a deliberate design choice to protect your privacy from accidental eavesdropping.

Think of the wake word like a receptionist at a front desk. They hear all the chatter in the lobby, but they only pay attention and act when someone says their name.

You'll often hear these terms used as if they mean the same thing, but there’s a small but significant difference. Nailing this down helps you understand what the technology is actually doing.

Speech recognition is all about understanding what is said. The main goal is to convert spoken words into written text. This is the magic behind transcription services and the dictation feature on your phone. It doesn't care who is speaking, only what words they're using.

Voice recognition, on the other hand, is about identifying who is speaking. It works like a vocal fingerprint, analyzing the unique qualities of someone's voice—like pitch, tone, and cadence—to verify their identity. You'll find this in security systems or when your smart home device recognizes different family members to give them personalized answers. For what it's worth, most of what we've discussed in this guide falls under the umbrella of speech recognition.

Ready to bring fast, accurate, and affordable voice AI to your own application? Lemonfox.ai provides a powerful Speech-to-Text API designed for developers. See how our privacy-first approach and straightforward tools can help you build the next great voice-powered experience. Start your free trial and see for yourself.