Published 9/27/2025

Natural language processing, or NLP, is the field of artificial intelligence that teaches computers how to read, understand, interpret, and even create human language. You can think of it as a bridge between how we communicate and how machines process data. It’s the smarts behind your phone's voice assistant and the reason your email inbox isn't completely buried in spam.

Ever tried to explain sarcasm to someone who takes everything literally? That’s pretty much the challenge we face with NLP. Human language is a beautiful mess of idioms, nuance, and context—things that computers, which are built on pure, unbending logic, have a tough time with.

NLP is the discipline focused on getting computers to work with and make sense of massive amounts of this messy human language. The end game is to make talking to our devices feel as natural as talking to another person.

At its core, NLP is all about making technology work for us, not the other way around. Instead of us having to learn rigid commands, NLP teaches machines to understand us on our own terms. This whole process hinges on a few key functions that work together to decode our language.

These functions are what turn a jumble of human text or speech into structured data a machine can actually use. So, when you tell your smart speaker to "play some relaxing music," NLP is what figures out your intent (play music) and the details (relaxing) to get the job done.

Natural language processing isn't just about understanding words; it's about understanding the intent and context behind them. It empowers machines to grasp meaning, sentiment, and relationships within language, moving beyond simple keyword matching to genuine comprehension.

The growth in this field is staggering. The global NLP market was valued at around USD 16.3 billion back in 2021 and is expected to rocket to USD 43.5 billion by 2027. This isn't just a niche tech trend; as you can see from recent market analysis, it's becoming fundamental to how we interact with technology every single day.

To really get what's happening under the hood, it helps to break NLP down into its main jobs. Think of these as the building blocks for any NLP-powered tool.

| Function | Description | Simple Example |
|---|---|---|
| Understanding | The machine's ability to read or hear language and parse its grammatical structure and meaning. | Identifying that "set a timer for 10 minutes" is a command with an action and a duration. |
| Interpreting | Going a step further to grasp the context, intent, and sentiment behind the words. | Recognizing that "I'd love another long meeting" is likely sarcastic based on context. |
| Generating | Taking structured information and creating human-like text or speech in response. | Crafting a summary of a news article or providing a spoken answer to a question. |

Ultimately, these functions don't work in isolation. They team up to power the tools we rely on, making technology feel a whole lot smarter and more intuitive.

To really get a feel for what natural language processing can do now, it helps to look back at where it started. The first attempts to get machines to understand us were a bit like trying to build IKEA furniture with a tiny, overly specific instruction manual. These early systems were built on what's called symbolic NLP, which meant humans had to sit down and painstakingly write out every single grammatical rule they could think of.

It sounds logical, right? But this rule-based approach ran into a huge problem: real human language is messy and loves to break the rules. It’s packed with slang, sarcasm, and weird exceptions that a rigid, dictionary-like system just can't compute. A classic example of the rule-based approach was the 1954 Georgetown-IBM experiment, which managed to translate about 60 Russian sentences into English using a fixed set of rules.

While it was a huge moment for the field, the experiment also showed just how massive the challenge was. The whole process was slow and incredibly fragile—it would fall apart the second it came across a phrase it hadn't been explicitly programmed to understand.

The real leap forward happened when researchers flipped their thinking on its head. Instead of trying to force-feed machines a dictionary, they decided to let the machines learn from language as it's actually used. This was the beginning of statistical NLP, which started picking up steam in the late 1980s and 1990s.

The idea behind it was simple but powerful. By feeding a machine huge amounts of text—we're talking books, articles, the early internet—it could start to figure out the patterns, probabilities, and connections between words all on its own. It didn't need a rule to know that "New York" is far more likely to be followed by "City" than by "pancake." This probabilistic approach was way more flexible and resilient.
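To make the "New York" example concrete, here is a minimal sketch of counting which word follows a two-word context. The four-sentence corpus is invented for illustration, and the function name `next_word_probability` is hypothetical; a real statistical model would learn from vastly more text and apply smoothing.

```python
from collections import Counter, defaultdict

# Toy corpus, invented for illustration only.
corpus = [
    "i moved to new york city last year",
    "new york city never sleeps",
    "she flew to new york yesterday",
    "we ate a pancake in new york city",
]

# For every pair of adjacent words, count which word comes next.
following = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for first, second, third in zip(words, words[1:], words[2:]):
        following[(first, second)][third] += 1

def next_word_probability(context, candidate):
    """Estimate P(candidate | context) from raw counts (no smoothing)."""
    counts = following.get(context, Counter())
    total = sum(counts.values())
    return counts[candidate] / total if total else 0.0

p_city = next_word_probability(("new", "york"), "city")
p_pancake = next_word_probability(("new", "york"), "pancake")
print(f"P(city | new york) = {p_city:.2f}")        # 0.75
print(f"P(pancake | new york) = {p_pancake:.2f}")  # 0.00
```

Nobody wrote a rule saying "City" follows "New York". The preference falls out of the counts, which is the core of the statistical approach.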

Suddenly, NLP systems could handle the chaos of real language. They could make a good guess based on what was statistically likely, rather than crashing because they hit a rule they didn't have.

The big ideas behind NLP actually go back to the mid-20th century. Alan Turing’s famous 1950 paper, "Computing Machinery and Intelligence," really set the stage by asking if machines could think. Early milestones like the Georgetown-IBM experiment kicked off decades of research, which was first dominated by these symbolic, rule-based methods. You can walk through a detailed timeline of NLP's evolution to see how everything unfolded.

This shift from hand-coded rules to statistical learning really paved the way for the NLP we see today.

The most recent chapter in this story is all about neural networks and deep learning. These are computer systems loosely inspired by how neurons in the brain connect, and they can process language with a level of nuance that older statistical methods just couldn't touch.

Instead of just counting how often words appear together, deep learning models can actually learn abstract concepts and the meaning behind the words. This is the magic that allows modern AI to understand not just what you typed, but what you were actually trying to say.

This whole journey—from brittle rules to statistical guesses, and now to deep, contextual understanding—is what took us from clunky, awkward machine translators to the smooth voice assistants and smart chatbots that are part of our daily lives. Each step closed the gap between how we talk and how machines compute.

To really get what’s happening inside an NLP system, think of it like a conversation. First, the computer has to listen and actually understand what you’re saying. Then, it needs to figure out how to give you a coherent, useful reply. This entire back-and-forth relies on two core components working together: Natural Language Understanding (NLU) and Natural Language Generation (NLG).

NLU is the "reading comprehension" part of the job. It’s where the machine takes messy, raw human language and translates it into structured data it can actually work with. NLG is the flip side—the "creative writing" part. It takes that structured data and spins it back into natural, human-sounding language.

Without both, the system is broken. NLU without NLG is like someone who understands every word you say but can't speak a single word back. And NLG without NLU? That’s like someone who can talk endlessly but understands absolutely nothing.

Natural Language Understanding is where the real analytical work happens. A machine has to meticulously pick apart a sentence to uncover its true meaning, and it's about much more than just dictionary definitions. It's about decoding grammar, context, and, most importantly, intent.

Let’s follow a simple request like, "Find a nearby coffee shop."

Tokenization: The very first thing an NLU system does is break the sentence into smaller pieces, or "tokens." So, "Find a nearby coffee shop" becomes ["Find", "a", "nearby", "coffee", "shop"]. This makes the language much easier to analyze piece by piece.

Part-of-Speech Tagging: Next, the system assigns a grammatical role to each token. It figures out that "Find" is a verb, "nearby" is an adjective, and "coffee" and "shop" are nouns. This helps it map out the relationships between the words.

Named Entity Recognition (NER): The system then scans for important, specific things. Here, it picks out "coffee shop" as the kind of place being asked about. This is the same kind of process that helps a machine spot names, dates, and locations in a larger block of text.

Intent Classification: Finally, NLU puts it all together to figure out the user's goal. In this case, the intent is clearly a search for a location. The system now has a clean, structured command it can execute: [Action: Search], [Object: Coffee Shop], [Qualifier: Nearby].

This breakdown, from a simple human phrase into a machine-readable plan, is the magic of NLU.
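The walk-through above can be sketched in a few lines of Python. This is a toy under stated assumptions, not how production NLU works: the `POS_LEXICON` and `INTENT_VERBS` tables are hypothetical hand-written lookups that only cover this one sentence, whereas real systems use trained models (for example, from libraries such as spaCy or NLTK).

```python
import re

# Hypothetical lookup tables, just large enough for the example sentence.
POS_LEXICON = {
    "find": "VERB", "a": "DET", "nearby": "ADJ", "coffee": "NOUN", "shop": "NOUN",
}
INTENT_VERBS = {"find": "Search", "play": "Play", "set": "Set"}

def tokenize(text):
    """Split text into word tokens, dropping punctuation."""
    return re.findall(r"[A-Za-z']+", text)

def tag_parts_of_speech(tokens):
    """Look each token up in the toy lexicon; unknown words are tagged 'X'."""
    return [(tok, POS_LEXICON.get(tok.lower(), "X")) for tok in tokens]

def parse_request(text):
    """Turn a short request into a structured action/object/qualifier plan."""
    tokens = tokenize(text)
    tagged = tag_parts_of_speech(tokens)
    verb = next((tok for tok, pos in tagged if pos == "VERB"), None)
    nouns = [tok for tok, pos in tagged if pos == "NOUN"]
    adjectives = [tok for tok, pos in tagged if pos == "ADJ"]
    return {
        "tokens": tokens,
        "action": INTENT_VERBS.get(verb.lower()) if verb else None,
        "object": " ".join(nouns).title() or None,
        "qualifier": " ".join(adjectives).title() or None,
    }

result = parse_request("Find a nearby coffee shop.")
print(result["tokens"])     # ['Find', 'a', 'nearby', 'coffee', 'shop']
print(result["action"])     # Search
print(result["object"])     # Coffee Shop
print(result["qualifier"])  # Nearby
```

The dictionary that comes out mirrors the [Action], [Object], [Qualifier] command described above.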

Once the machine understands the request, Natural Language Generation kicks in to build a reply. NLG is the engine behind everything from a chatbot’s quick "Okay, here are some coffee shops near you" to writing entire articles from a set of data points.

Continuing our coffee shop example, the system now has a list of local options. NLG’s job is to present this information clearly.

The final output might be something warm and helpful, like, "I found three coffee shops within a mile. The closest is The Daily Grind, which is just around the corner."
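One simple way to produce a reply like that is template filling, sketched below. This is only an illustration: the function `describe_results`, the distances, and the shop names other than The Daily Grind are made up, and modern assistants typically generate text with neural models rather than fixed templates.

```python
NUMBER_WORDS = {1: "one", 2: "two", 3: "three", 4: "four", 5: "five"}

def describe_results(place_type, results):
    """Turn structured search results into one human-sounding reply."""
    if not results:
        return f"Sorry, I couldn't find any {place_type}s nearby."
    closest = min(results, key=lambda place: place["miles"])
    count = NUMBER_WORDS.get(len(results), str(len(results)))
    plural = "" if len(results) == 1 else "s"
    return (
        f"I found {count} {place_type}{plural} nearby. "
        f"The closest is {closest['name']}, about {closest['miles']} miles away."
    )

# Hypothetical structured data handed over by the search step.
results = [
    {"name": "Bean There", "miles": 0.8},
    {"name": "The Daily Grind", "miles": 0.1},
    {"name": "Mug Life", "miles": 0.5},
]
reply = describe_results("coffee shop", results)
print(reply)
# I found three coffee shops nearby. The closest is The Daily Grind, about 0.1 miles away.
```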

Key Takeaway: Think of NLU as deconstruction—breaking language down to find its meaning. NLG is all about reconstruction—building meaningful language back up from structured data.

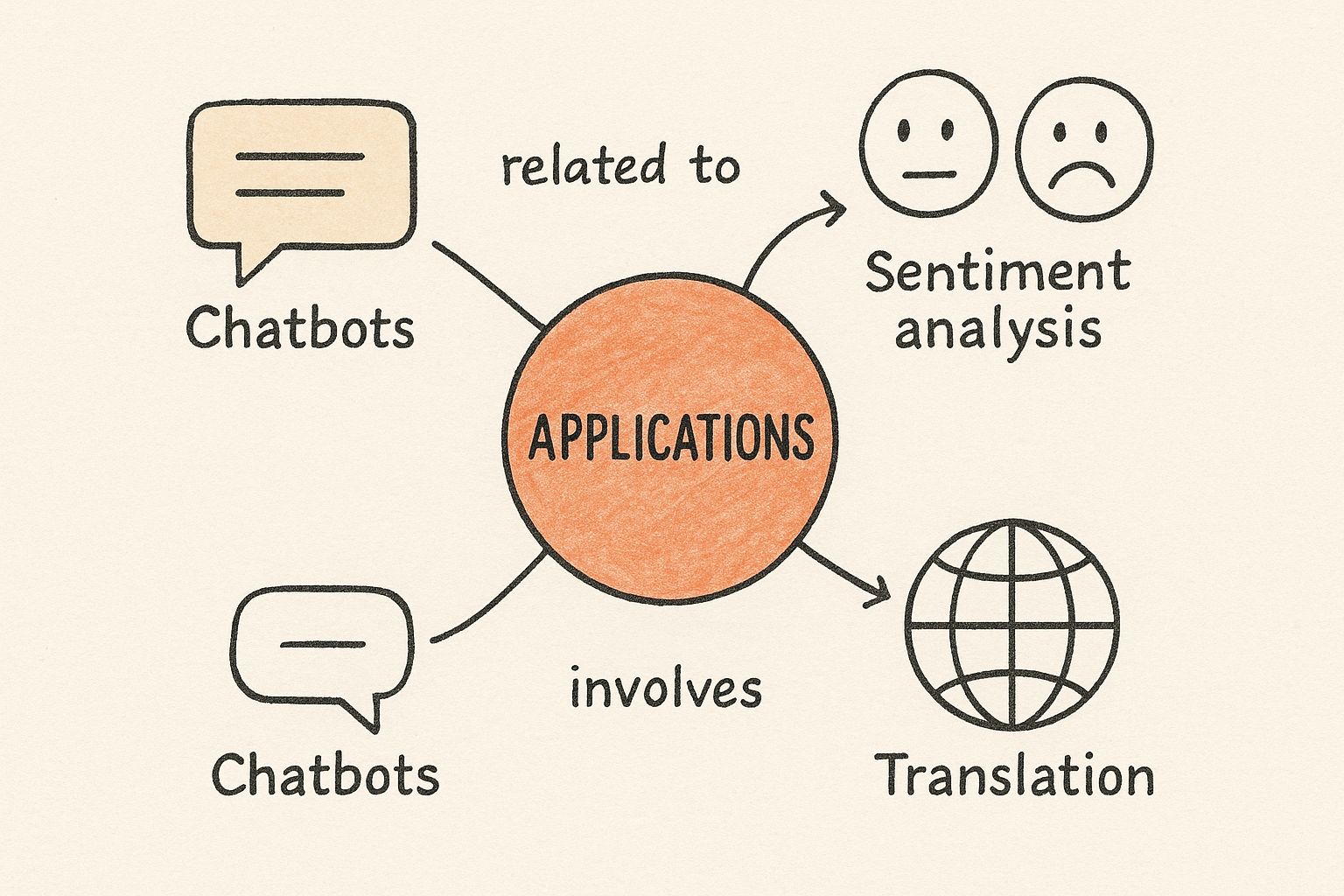

So many of the NLP tools we use every day are built on a handful of core techniques. Each one tackles a specific piece of the language puzzle, from sorting emails to understanding customer reviews.

Here’s a quick look at some of the most common ones.

| Technique | What It Does | Real-World Use Case |
|---|---|---|
| Sentiment Analysis | Determines the emotional tone (positive, negative, neutral) behind a piece of text. | Companies tracking brand perception on social media or analyzing customer reviews. |
| Topic Modeling | Scans a collection of documents to discover the main themes or topics that run through them. | A news site automatically grouping articles about politics, sports, and technology. |
| Text Summarization | Condenses a long article or document into a short, coherent summary. | Tools that create a "TL;DR" (Too Long; Didn't Read) version of a lengthy report. |
| Machine Translation | Automatically translates text from one language to another. | Google Translate converting a webpage from Japanese to English in real time. |
| Named Entity Recognition (NER) | Identifies and categorizes key entities like names, places, dates, and organizations. | Legal software that scans contracts to quickly find all company names and dates. |

These techniques are the building blocks that allow developers to create sophisticated applications that can interact with language on a surprisingly deep level.

As the image shows, whether it's powering a chatbot or running sentiment analysis, the core goal is always the same: make sense of human language and respond intelligently.

Modern NLP models aren't programmed with rigid grammar rules like in the old days. Instead, they learn by example—and lots of them. This means they need huge amounts of high-quality text and speech data to train on. The better and more diverse the data, the more nuanced the model's understanding becomes.

Before these models can learn anything, that data has to be carefully cleaned and structured. In fact, a huge amount of effort goes into preparing data for large language models. This prep work ensures the machine learns from clean, relevant, and unbiased information, which is absolutely essential for building reliable NLP tools.

It’s easy to think of natural language processing as some far-off, complex technology. But the truth is, you're interacting with it all the time, probably without even realizing it. NLP is the invisible engine running behind the scenes of so many of the digital tools you use from the moment you wake up.

Every time you type a query into Google, see a suggested reply in your email, or ask your smart speaker for the weather, you’re tapping into the power of NLP. It's not magic; it’s just incredibly good at understanding what you mean, making your technology feel less like a machine and more like a helpful assistant.

Let’s pull back the curtain and look at a few places where NLP is quietly making your life easier.

Think about the last time you checked your email. How did your inbox know to hide that "special offer" from a prince in your spam folder? That's one of the oldest and still most useful applications of NLP.

Spam Filtering: Sophisticated NLP algorithms scan every incoming email, looking for tell-tale signs of junk. They analyze the phrasing, check for suspicious links, and weigh countless other factors to keep your primary inbox clean and focused.

Smart Replies: When Gmail suggests a quick "Got it, thanks!" or "Sounds good to me," it's not just guessing. NLP analyzes the context of the message you received and offers up a few logical, time-saving responses.

Autocorrect & Predictive Text: This is a classic. Your phone’s keyboard isn't just correcting typos; it's using NLP to learn your unique communication style and predict what you're going to type next. That’s why it feels like it gets smarter the more you use it.

All these little conveniences are powered by NLP's ability to sift through massive amounts of text, spot patterns, and make incredibly accurate predictions.
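As a rough illustration of the pattern-spotting behind spam filtering, here is a minimal Naive Bayes sketch in plain Python. Naive Bayes is a classic technique for this task, but the article does not say which method any particular email provider uses, and the six training messages below are invented.

```python
import math
from collections import Counter

# Tiny made-up training set; a real filter learns from far more mail.
TRAINING = [
    ("spam", "claim your free prize now"),
    ("spam", "special offer from a prince send money now"),
    ("spam", "free money click now"),
    ("ham", "are we still meeting for lunch tomorrow"),
    ("ham", "please send the report before the meeting"),
    ("ham", "thanks for lunch see you tomorrow"),
]

word_counts = {"spam": Counter(), "ham": Counter()}
label_counts = Counter()
for label, text in TRAINING:
    label_counts[label] += 1
    word_counts[label].update(text.split())

vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])

def log_score(label, text):
    """log P(label) plus the sum of log P(word | label), add-one smoothed."""
    total_words = sum(word_counts[label].values())
    score = math.log(label_counts[label] / sum(label_counts.values()))
    for word in text.lower().split():
        count = word_counts[label][word] + 1
        score += math.log(count / (total_words + len(vocabulary)))
    return score

def classify(text):
    """Return whichever label gives the message the higher score."""
    return max(("spam", "ham"), key=lambda label: log_score(label, text))

print(classify("free prize from a prince"))         # spam
print(classify("see you at the meeting tomorrow"))  # ham
```

The filter never sees a rule like "princes are suspicious". It simply learns that certain words show up more often in junk than in normal mail.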

Search engines have evolved far beyond simple keyword matching. Today, searching online is a masterclass in applied NLP, where the goal is to understand your intent, not just the literal words you typed.

When you search for something like "best pizza place open now," the search engine isn't just looking for pages with those words. It uses NLP to break down your request:

The real goal of modern search is to give you an answer, not just a list of blue links. NLP is what bridges the gap between your human question and the computer's vast index of information, allowing it to deliver exactly what you need.

This is the same core technology that lets you talk to Siri, Alexa, or Google Assistant. They take your spoken words, use NLP to figure out what you're actually asking for, and then execute the command.

Beyond just searching and typing, NLP has become a critical part of how we interact with businesses and consume media.

Many companies now rely on AI-powered chatbots for frontline customer support. These bots use NLP to understand your problem, pull relevant information from a knowledge base, and give you an instant answer. If things get too complex, they know when to hand you off to a real person, freeing up human agents for the trickiest issues.

And what about your downtime? Your favorite streaming services are putting NLP to work there, too.

These are just a few common examples, of course. To get a broader sense of how this technology is being used across different sectors, you can explore these powerful Natural Language Processing applications. Each one is a testament to how NLP has moved from a niche concept to an essential part of our digital lives.

For all its incredible progress, natural language processing still stumbles over the very things that make human language so rich and interesting. It's brilliant at tasks like instant translation or summarizing a massive report, but it gets tripped up by the subtle, messy, and deeply human parts of how we actually talk.

These aren't just minor bugs; they're fundamental puzzles that researchers are actively trying to piece together. Getting a handle on these hurdles gives you a much clearer picture of both where NLP is today and where it's heading tomorrow.

Let's face it, human language is built on ambiguity. A single word can have a dozen meanings, and we rely on context to instantly know which one is right. An NLP model, on the other hand, has to learn this skill from scratch, and it’s not always easy.

Think about the word "bank." When you read, "He sat on the river bank," you know exactly what that means. But in the sentence, "He deposited a check at the bank," it's something entirely different. For us, the surrounding words make it obvious. For an AI, this requires a deep contextual grasp that can be surprisingly difficult to nail down every single time. This is known as lexical ambiguity, and it's a persistent headache for developers.

The core issue is that NLP models don't know things in the way humans do. They operate on statistical patterns. When they see an ambiguous word, they're making a highly educated guess based on the billions of examples they've seen. Sometimes, that guess is just wrong.
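One classic way to make that educated guess is a simplified version of the Lesk algorithm: pick the sense whose dictionary definition shares the most words with the sentence. The sketch below assumes a hypothetical two-entry glossary (`SENSES`) written for this example; real implementations draw on resources like WordNet, and modern models rely on learned contextual representations instead.

```python
# Hypothetical mini-glossary for the two senses of "bank".
SENSES = {
    "river_bank": "sloping land beside a river stream or lake water",
    "financial_bank": "institution where people deposit money check cash or take loans",
}

STOPWORDS = {"a", "an", "the", "he", "she", "at", "on", "or", "of", "where"}

def content_words(text):
    """Lowercase the text, strip basic punctuation, and drop stopwords."""
    return {w.strip(".,").lower() for w in text.split()} - STOPWORDS

def disambiguate(sentence):
    """Pick the sense whose gloss overlaps most with the sentence's words."""
    context = content_words(sentence)
    overlaps = {
        sense: len(context & content_words(gloss))
        for sense, gloss in SENSES.items()
    }
    return max(overlaps, key=overlaps.get)

print(disambiguate("He sat on the river bank."))          # river_bank
print(disambiguate("He deposited a check at the bank."))  # financial_bank
```

The guess rests entirely on word overlap. A sentence with no telling neighbors, such as "He went to the bank," gives this approach nothing to work with, which is exactly the kind of case where models get it wrong.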

This same confusion extends to entire sentences, which we call syntactic ambiguity. The classic example is the sentence: "I saw a man on a hill with a telescope." So, who has the telescope? Are you using it to see the man, or does the man on the hill have one? The sentence structure leaves it open to interpretation, forcing the NLP model to navigate a maze of possibilities.

One of the tallest hurdles for any AI is understanding what isn't explicitly said. Sarcasm, irony, and humor are all about the gap between what words literally mean and what the speaker intends them to mean. That requires a shared understanding of tone and social cues—things that are completely invisible in plain text.

When someone writes, "Oh, great. Another meeting," a simple sentiment model that scores individual words is likely to take that literally and tag the sentiment as positive. It completely misses the implied frustration that a person would pick up on instantly. Such models key on the literal meaning of words, not the invisible layer of intent that colors our conversations.

Without the ability to detect this kind of nuance, an AI can seriously misinterpret user feedback, social media posts, and customer reviews, leading to some pretty skewed results.
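The failure is easy to reproduce with a word-counting sentiment scorer. The sketch below is deliberately naive, and its six-word `LEXICON` is hypothetical; it stands in for any approach that adds up word-level scores without looking at tone or context.

```python
# Hypothetical word scores; real sentiment lexicons are far larger.
LEXICON = {
    "great": 1, "love": 1, "helpful": 1,
    "terrible": -1, "boring": -1, "hate": -1,
}

def naive_sentiment(text):
    """Sum per-word scores and map the total to a label."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(LEXICON.get(w, 0) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

print(naive_sentiment("Oh, great. Another meeting."))  # positive (sarcasm missed)
print(naive_sentiment("That meeting was boring."))     # negative
```

The word "great" carries the whole score, so the sarcastic complaint lands in the positive pile.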

This is probably the most serious challenge facing the field: algorithmic bias. NLP models are, in a very real sense, a reflection of the data they were trained on. If that data—pulled from books, articles, and the wider internet—is full of human prejudices related to gender, race, or culture, the model will learn and often amplify those biases.

This isn't just a theoretical problem; it has real-world consequences.

Fixing this requires a massive effort to carefully curate training data and develop new techniques to audit and correct for bias in AI models. It’s a complex, ongoing fight to make sure that as we build more powerful NLP systems, we aren't also encoding our own prejudices into the technology of the future.

The world of natural language processing is moving at a breakneck speed. We're quickly shifting beyond simple commands and into a new territory of genuine, fluid conversation between people and machines.

The line is blurring, and it's happening largely because of incredibly powerful large language models (LLMs). These aren't just small steps forward; they represent a fundamental change in how we'll interact with technology in our daily lives.

For decades, we’ve had to learn the machine's language. Now, machines are finally becoming fluent in ours. This opens up a future where technology feels less like a rigid tool and more like an intuitive partner, one that can grasp complex instructions and carry on a real conversation.

The next great leap for NLP is all about context and memory. Our current voice assistants are pretty handy, but they have a terrible short-term memory, often forgetting what you said just a sentence ago. The next generation of AI assistants will be different. They will remember your past conversations, learn what you like, and even start to anticipate your needs.

Think about giving an assistant a command like this: "Plan a weekend mountain trip for me and two friends. Find a pet-friendly cabin with a great view, book it for us, and then find a few easy hiking trails nearby." Accomplishing that requires the AI to juggle multiple tasks, make decisions based on context, and connect with different services. That's no longer science fiction; it's right around the corner.

The ultimate goal is to make interacting with data as simple as having a conversation. This move democratizes access to information, allowing anyone to ask complex questions of a database using plain English instead of specialized query languages. The implications for business intelligence and research are enormous.

We're already seeing this in action. Companies are starting to use NLP that lets people ask questions of huge databases just by talking or typing. This removes the barrier of needing to know a query language like SQL, putting powerful data analysis capabilities into the hands of a lot more people.

Beyond just being better assistants, advanced NLP is poised to change how we find information and even how we create. The amount of new information produced every day is staggering—far more than any person could ever hope to process.

Here’s a glimpse of what’s starting to happen:

Ultimately, understanding what natural language processing is means seeing its potential to unlock human creativity and intelligence. As these systems grow more sophisticated, they will weave themselves into our lives, not just as tools, but as collaborators helping us tackle bigger challenges and explore new ideas. The conversation is really just getting started.

As we've journeyed through the world of NLP, a few questions tend to pop up again and again. Let's tackle some of the most common ones to help clarify how NLP fits into the bigger picture of AI and how it deals with the beautiful complexity of human language.

It’s easy to get AI, machine learning, and NLP tangled up since they're often mentioned in the same breath. The best way to think about them is like a set of Russian nesting dolls.

Artificial Intelligence (AI) is the biggest doll on the outside. It's the whole field of building machines that can do things we typically associate with human intelligence, from recognizing faces to playing complex games.

Machine Learning (ML) is the next doll inside. ML is one of the most important ways we achieve AI. Instead of giving a computer a rigid set of instructions, we feed it a ton of data and let it figure out the patterns for itself.

Natural Language Processing (NLP) is an even smaller, more specialized doll. It’s a subfield of AI that, in its modern form, leans heavily on machine learning techniques to solve one specific, massive challenge: understanding and generating human language.

So, you can see how they fit together. AI is the ultimate goal, ML is a powerful technique to get there, and NLP is a specific use of that technique focused squarely on language.

You don't need a computer science degree to work in NLP. While a formal CS degree certainly doesn't hurt, it’s definitely not a prerequisite for getting your hands dirty with natural language processing. The field has opened up dramatically in recent years, thanks to an explosion of great online resources and user-friendly tools.

Frankly, passion and a drive to build things often count for more than a specific diploma. Some of the most creative NLP professionals I know have backgrounds in linguistics, data science, or even philosophy. Their different ways of looking at language are a huge asset.

The most important thing is a genuine curiosity and a willingness to just start building. With tons of free tutorials, open-source libraries like NLTK and spaCy, and vibrant online communities, there has never been a better time to dive in. Your first small project will teach you more than you can imagine.

Handling less widely spoken languages is one of the toughest nuts for NLP to crack. Most of the powerful models you hear about are trained on absolutely gigantic datasets. The problem is, most of that data is in widely spoken languages like English, Mandarin, or Spanish.

This leaves a big hole. Languages with less available digital text are called "low-resource" languages, and building good NLP tools for them is a major challenge. The models just don't have enough examples to learn from.

Researchers are working on some pretty smart ways to close this gap:

The mission is to build technology that is fair and accessible to everyone, no matter what language they speak. It's a work in progress, but it's essential for making NLP a truly global tool.

Ready to integrate powerful and affordable language AI into your next project? Lemonfox.ai offers a cutting-edge Speech-To-Text and Text-to-Speech API that makes high-quality transcription and voice generation accessible to everyone. Get started with our free trial and see how easy it is to bring your application to life at https://www.lemonfox.ai.